Information Extraction from Documents using the Trustworthy Language Model

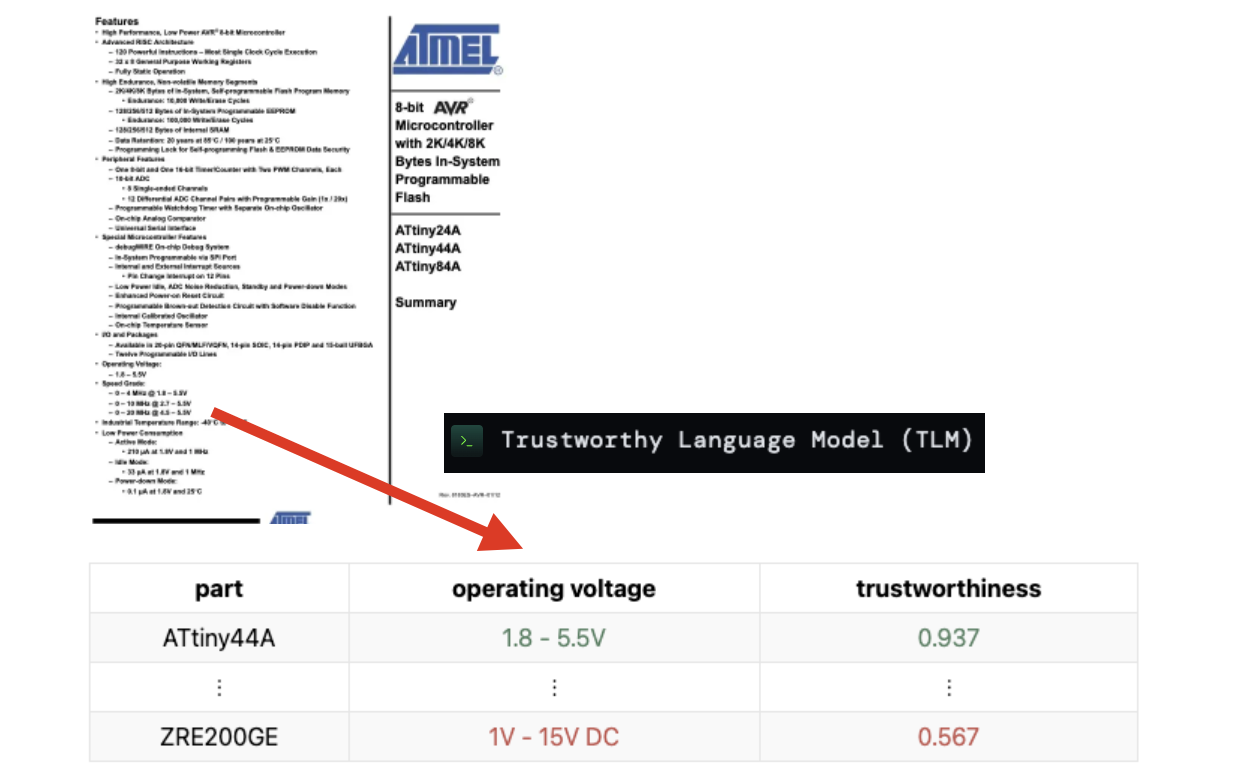

This tutorial demonstrates how to use Cleanlab’s Trustworthy Language Model (TLM) to reliably extract data from unstructured text documents.

You can use TLM like any other LLM, just prompt it with the document text provided as context along with an instruction detailing what information should be extracted and in what format. While today’s GenAI and LLMs demonstrate promise for such information extraction, the technology remains fundamentally unreliable. LLMs may extract completely wrong (hallucinated) values in certain edge-cases, but you won’t know with existing LLMs. Cleanlab’s TLM also provides a trustworthiness score alongside the extracted information to indicate how confident we can be regarding its accuracy. TLM trustworthiness scores offer state-of-the-art automation to catch badly extracted information before it harms downstream processes.

Setup

This tutorial requires a TLM API key. Get one here, and first complete the quickstart tutorial.

The Python packages required for this tutorial can be installed using pip:

apt-get -qq install poppler-utils # Required for PDF processing with unstructured library

%pip install --upgrade cleanlab-tlm "unstructured[pdf]==0.18.15"

# Set your API key

import os

os.environ["CLEANLAB_TLM_API_KEY"] = "<API key>" # Get your free API key from: https://tlm.cleanlab.ai/

import pandas as pd

from unstructured.partition.pdf import partition_pdf

from IPython.display import display, IFrame

from cleanlab_tlm import TLM

pd.set_option('display.max_colwidth', None)

Document Dataset

The ideas demonstrated in this tutorial apply to arbitrary information extracation tasks involving any types of documents. The particular documents demonstrated in this tutorial are a collection of datasheets for electronic parts, stored in PDF format. These datasheets contain technical specifications and application guidelines regarding the electronic products, serving as an important guide for users of these products. Let’s download the data and look at an example datasheet.

wget -nc https://cleanlab-public.s3.amazonaws.com/Datasets/electronics-datasheets/electronics-datasheets.zip

mkdir datasheets/

unzip -q electronics-datasheets.zip -d datasheets/

Here’s the first page of a sample datasheet (13.pdf):

This document contains many details, so it might be tough to extract one particular piece of information. Datasheets may use complex and highly technical language, while also varying in their structure and organization of information.

Hence, we’ll use the Trustworthy Language Model (TLM) to automatically extract key information about each product from these datasheets. TLM also provides a trustworthiness score to quantify how confident we can be that the right information was extracted, which allows us to catch potential errors in this process at scale.

Convert PDF Documents to Text

Our documents are PDFs, but TLM requires text inputs (like any other LLM). We can use the open-source unstructured library to extract the text from each PDF.

import nltk

nltk.download('punkt_tab')

nltk.download('averaged_perceptron_tagger_eng')

def extract_text_from_pdf(filename):

elements = partition_pdf(filename, languages=["en"])

text = "\n".join([str(el) for el in elements])

return text

directory = "datasheets/"

all_files = os.listdir(directory)

pdf_files = sorted([file for file in all_files if file.endswith('.pdf')])

pdf_texts = [extract_text_from_pdf(os.path.join(directory, file)) for file in pdf_files]

Here is a sample of the extracted text:

print(pdf_texts[0])

Extract Information using TLM

Let’s initalize TLM, here using default configuration settings.

tlm = TLM() # See Advanced Tutorial for optional TLM configurations to get better/faster results

We’ll use the following prompt template that instructs our model to extract the operating voltage of each electronics product from its datasheet:

Please reference the provided datasheet to determine the operating voltage of the item.

Respond in the following format: "Operating Voltage: [insert appropriate voltage range]V [AC/DC]" with the appropriate voltage range and indicating "AC" or "DC" if applicable, or omitting if not.

If the operating voltage is a range, write it as "A - B" with "-" between the values.

If the operating voltage information is not available, specify "Operating Voltage: N/A".

Datasheet: <insert-datasheet>

Some of these datasheets are very long (over 50 pages), and TLM might not have the ability to ingest large inputs (contact us if you require larger context windows: sales@cleanlab.ai). For this tutorial, we’ll limit the input datasheet text to only the first 10,000 characters. Most datasheets summarize product technical details in the first few pages, so this should not be an issue for our information extraction task. If you already know roughly where in your documents the relevant information lies, you can save cost and runtime by only including text from the relevant part of the document in your prompts rather than the whole thing.

prompt_template = """Please reference the provided datasheet to determine the operating voltage of the item.

Respond in the following format: "Operating Voltage: [insert appropriate voltage range]V [AC/DC]" with the appropriate voltage range and indicating "AC" or "DC" if applicable, or omitting if not.

If the operating voltage is a range, write it as "A - B" with "-" between the values.

If the operating voltage information is not available, specify "Operating Voltage: N/A".

Datasheet:

"""

texts_with_prompt = [prompt_template + text[:10000] for text in pdf_texts]

After forming our prompts for each datasheet, let’s generate LLM responses using these prompts. Here we use TLM.prompt() to both generate responses and score their trustworthiness, but you can alternatively use any LLM to generate responses and TLM.get_trustworthiness_score() to score their trustworthiness.

tlm_response = tlm.prompt(texts_with_prompt) # run all prompts at once in batch-mode

Let’s organize the extracted information from the LLM and trustworthiness scores, along with the filename of each document.

results_df = pd.DataFrame({

"filename": pdf_files,

"response": [d["response"] for d in tlm_response],

"trustworthiness_score": [d["trustworthiness_score"] for d in tlm_response]

})

results_df.head()

| filename | response | trustworthiness_score | |

|---|---|---|---|

| 0 | 1.pdf | Operating Voltage: 5.5V DC | 0.987320 |

| 1 | 10.pdf | Operating Voltage: 2.7 - 5.5V DC | 0.992886 |

| 2 | 11.pdf | Operating Voltage: 1.8 - 5.5V | 0.977994 |

| 3 | 12.pdf | Operating Voltage: 0.9 - 1.6V DC | 0.991136 |

| 4 | 13.pdf | Operating Voltage: 1.8 - 5.5V | 0.985581 |

Examine Results

The LLM has extracted the product’s operating voltage from each datasheet, and we have a trustworthiness_score indicating how confident we can be that the LLM output is correct.

High Trustworthiness Scores

The responses with the highest trustworthiness scores represent datasheets where we can be the most confident that the LLM has accurately extracted the product’s operating voltage from its datasheet document.

results_df.sort_values("trustworthiness_score", ascending=False).head()

| filename | response | trustworthiness_score | |

|---|---|---|---|

| 48 | 53.pdf | Operating Voltage: 2.2 - 3.6V DC | 0.998998 |

| 11 | 2.pdf | Operating Voltage: 10 - 30V DC | 0.998991 |

| 26 | 33.pdf | Operating Voltage: 1.62 - 3.6V DC | 0.998701 |

| 22 | 3.pdf | Operating Voltage: 2.4 - 3.6V DC | 0.998617 |

| 44 | 5.pdf | Operating Voltage: 4.5 - 9V DC | 0.998272 |

Let’s look at an example response with one of our highest trustworthiness scores.

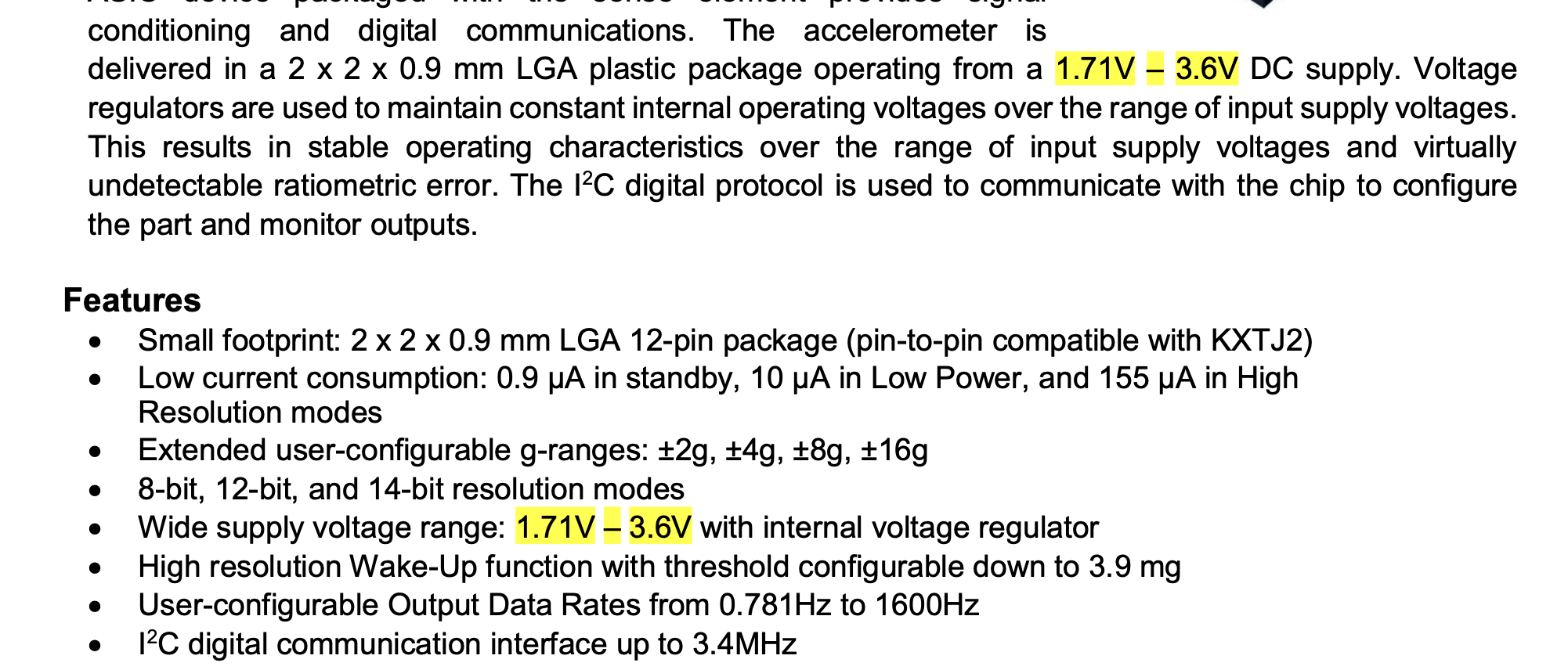

results_df.loc[results_df["filename"] == "31.pdf"]

| filename | response | trustworthiness_score | |

|---|---|---|---|

| 24 | 31.pdf | Operating Voltage: 1.71 - 3.6V DC | 0.993152 |

Below we show part of a datasheet for which the LLM extraction received a high trustworthiness score, document 31.pdf.

From this image (you can also find this on the page 1 in the original file: 31.pdf), we see it clearly specifies the operating voltage range to be 1.71 VDC to 3.6 VDC. This matches the LLM’s extracted information. High trustworthiness scores help you know which LLM outputs you can be confident in!

Low Trustworthiness Scores

The responses with the lowest trustworthiness scores represent datasheets where you should be least confident in the LLM extractions.

results_df.sort_values("trustworthiness_score").head()

| filename | response | trustworthiness_score | |

|---|---|---|---|

| 25 | 32.pdf | Operating Voltage: 3.3V DC | 0.331792 |

| 51 | 56.pdf | Operating Voltage: 3.3 - 280V AC/DC | 0.489190 |

| 29 | 36.pdf | Operating Voltage: 3.3V DC | 0.507770 |

| 56 | 60.pdf | Operating Voltage: 80 Vp-p | 0.662016 |

| 27 | 34.pdf | Operating Voltage: 12 - 30 V DC | 0.725684 |

Let’s zoom in on one example where the LLM extraction received a low trustworthiness score.

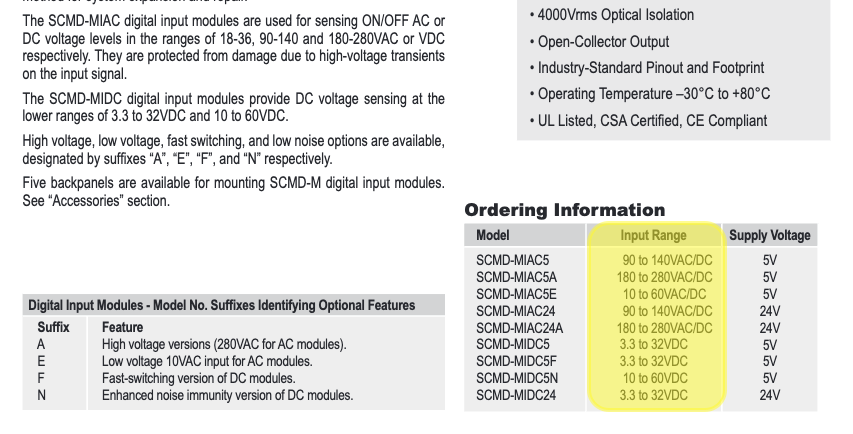

results_df.loc[results_df["filename"] == "56.pdf"]

| filename | response | trustworthiness_score | |

|---|---|---|---|

| 51 | 56.pdf | Operating Voltage: 3.3 - 280V AC/DC | 0.48919 |

The LLM extracted an operating voltage range of 3.3 - 280V AC/DC for datasheet 56.pdf, which received a low trustworthiness score. We depict part of this dataset below (you can find this information on Page 1 of 56.pdf). While the definition of the operating voltage is not explicitly stated, datasheet lists several different product models with different input voltage ranges. In this case, the LLM essentially combined the input ranges for all models, and hence returns the wrong response. At least you automatically get a low trustworthiness score for this response, allowing you to catch this incorrect LLM output.

Structured Outputs

Above, we extracted the operating voltage from datasheets using basic LLM calls. Let’s explore more advanced data extraction that enforces structured outputs from the LLM. With minimal code changes, you can extract multiple fields simultaneously and score the trustworthiness of each field in the structured output.

Let’s extract multiple fields in a specific format that we define in a structured output schema. Here we’ll extract:

- Operating voltage

- Whether the maximum dimension exceeds 100mm

First install and import necessary libraries.

%pip install openai pydantic

from pydantic import BaseModel, Field

import json

from typing import Literal

from openai import OpenAI

from cleanlab_tlm.utils.chat_completions import TLMChatCompletion

os.environ["OPENAI_API_KEY"] = "<OpenAI API key>" # Here we'll showcase using OpenAI to generate structured outputs, but you can use TLM for this instead too

Here, we utilize OpenAI’s Chat Completions API to extract structured data from the datasheet, and use TLM to score the trustworthiness of those extractions.

The decorator allows us to first use OpenAI to extract and parse the information, then use TLM to assess the reliability of each extracted field with minimal setup. For more information, see our TLM for Chat Completions tutorial.

If you don’t have an OpenAI account, you can use your TLM account to both generate the structured outputs and score their trustworthiness, as shown here.

import functools

def add_trust_scoring(tlm_instance):

"""Decorator factory that creates a trust scoring decorator."""

def trust_score_decorator(fn):

@functools.wraps(fn)

def wrapper(**kwargs):

response = fn(**kwargs)

score_result = tlm_instance.score(response=response, **kwargs)

response.tlm_metadata = score_result

return response

return wrapper

return trust_score_decorator

tlm = TLMChatCompletion(options={"log": ["per_field_score"]})

client = OpenAI()

client.chat.completions.parse = add_trust_scoring(tlm)(client.chat.completions.parse)

Extract Multiple Fields from Documents

Let’s create a prompt for structured extraction of multiple fields at once. Here we just show this for one example datasheet.

# Modify our existing prompt template from the tutorial

prompt_template = """Please reference the provided datasheet to determine the operating voltage of the item and whether any dimension exceeds 100mm.

For operating voltage:

Respond in the format: "[insert appropriate voltage range]V [AC/DC]" with the appropriate voltage range and indicating "AC" or "DC" if applicable, or omitting if not.

If the operating voltage is a range, write it as "A - B" with "-" between the values.

If the operating voltage information is not available, specify "N/A".

For maximum dimension:

Also determine if any dimension (length, width, or height) exceeds 100mm.

Choose 'Yes', 'No', or 'N/A' if dimensions are not specified.

Datasheet:

"""

# Sample datasheet text (first 1000 characters from the datasheet)

datasheet_text = """

0.5w Solar Panel 55*70

This is a custom solar panel, which mates directly with many of our development boards and has a high efficiency at 17%. Unit has a clear epoxy coating with hard-board backing. Robust sealing for out door applications!

Specification

PET

Package

Typical peak power

0.55W

Voltage at peak power

5.5w

Current at peak power

100mA

Length

70 mm

Width

55 mm

Depth

1.5 mm

Weight

17g

Efficiency

17%

Wire diameter

1.5mm

Connector

2.0mm JST

"""

# Build the full prompt

full_prompt = prompt_template + datasheet_text

We define a Pydantic schema to specify the extracted data format:

class DatasheetInfo(BaseModel):

operating_voltage: str = Field(

description="The operating voltage of the product in the format 'X - Y V [AC/DC]' or single value 'X V [AC/DC]'. Use 'N/A' if not available."

)

max_dimension_exceeds_100mm: Literal["Yes", "No", "N/A"] = Field(

description="Whether any dimension (length, width, or height) exceeds 100mm. Choose 'Yes', 'No', or 'N/A' if dimensions are not specified."

)

Now we use the OpenAI API to extract multiple fields at once. After you decorate OpenAI’s Chat Completions function, your existing code that uses the decorated function will automatically compute trust scores as well (zero change needed in other code).

completion = client.chat.completions.parse(

model="gpt-4.1-mini",

messages=[

{"role": "user", "content": full_prompt}

],

response_format=DatasheetInfo,

)

# Extract the structured information and trustworthiness score

extracted_info = completion.choices[0].message.parsed

trustworthiness_score = completion.tlm_metadata["trustworthiness_score"]

per_field_scores = completion.tlm_metadata["log"]["per_field_score"]

# Display the results

print(f"Extracted Information:")

print(f"Operating Voltage: {extracted_info.operating_voltage}")

print(f"Max Dimension Exceeds 100mm: {extracted_info.max_dimension_exceeds_100mm}")

print(f"Trustworthiness Score: {trustworthiness_score}")

print(f"\nPer-field trustworthiness scores:")

print(json.dumps(per_field_scores, indent=2))

The per_field_scores dictionary contains a granular confidence score and explanation for each extracted field. Since this dictionary can be overwhelming for larger schemas, we provide a get_untrustworthy_fields() method that:

- Prints detailed information about low-confidence fields

- Returns a list of fields that may need manual review due to low trust scores

untrustworthy_fields = tlm.get_untrustworthy_fields(tlm_result=completion)

This method returns a list of fields whose confidence score is low, allowing you to focus manual review on the specific fields whose extracted value is untrustworthy.

untrustworthy_fields

Next Steps

Don’t let unreliable LLM outputs block AI automation to extract information from documents at scale! With TLM, you can let LLMs automatically process the documents where they are trustworthy and automatically detect which remaining LLM outputs to manually review. This saves your team time and improves the accuracy of extracted information.

- Also check out our Structured Outputs and Data Annotation tutorials.

- To improve extraction accuracy, run TLM with a more powerful

modelandquality_presetconfiguration.