Scoring the Trustworthiness of ChatBot Responses over Multi-Turn Conversations

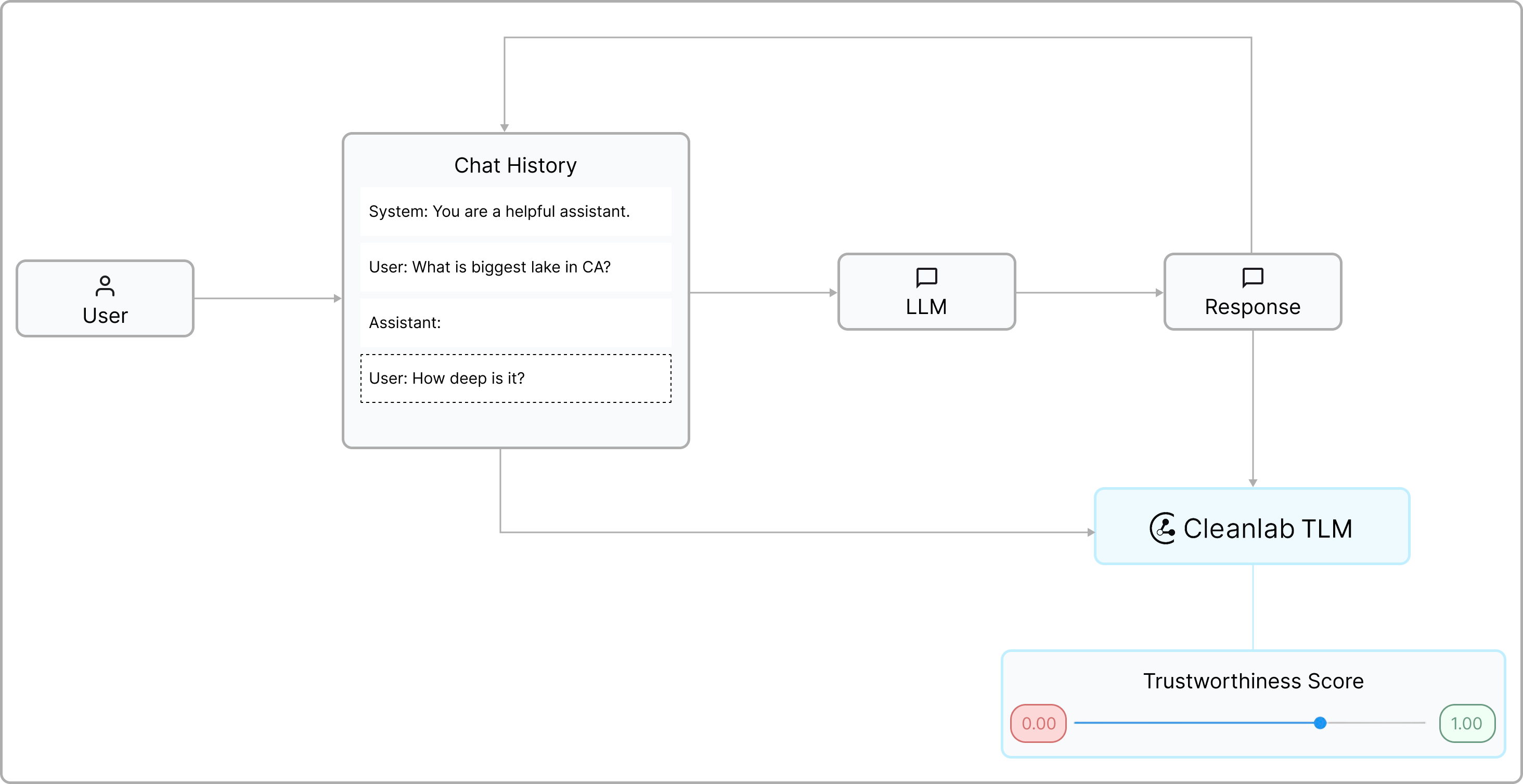

Conversational chatbots generate LLM responses based on a running dialogue history with the user. This tutorial demonstrates how to score the trustworthiness of chatbot responses in multi-turn conversational settings. Cleanlab works with any chatbot framework (e.g. Claude, Gemini, LlamaIndex), memory mechanism (e.g., message trimming, summary memory), and LLM model.

If you are using LangChain or any API compatible with OpenAI’s Chat Completions, we have dedicated tutorials you can consider first:

Setup

This tutorial requires a TLM API key. Get one here.

# Install required packages

%pip install -U openai cleanlab-tlm

# Set your API key

import os

os.environ["CLEANLAB_TLM_API_KEY"] = "<YOUR_CLEANLAB_API_KEY>" # Get your free API key from: https://tlm.cleanlab.ai/

# Import libraries

import openai

from cleanlab_tlm import TLM

from cleanlab_tlm.utils.chat import form_prompt_string

from typing import List, Dict

Multi-turn Conversations

Your chatbot system should already have logic to manage conversation history and generate LLM responses. For this tutorial, we standardize the format of chat_history to be a list of dictionaries, each with "role" and "content" keys — identical to OpenAI’s message format.

To follow this tutorial, your generate_llm_response() function should take in this chat_history and return a response from your LLM provider (e.g. LangChain, Claude, Gemini, etc.). Here we use OpenAI as an example LLM provider, but provide generate_llm_response() implementations for other LLM providers so you can easily swap in your own.

client = openai.OpenAI(api_key="<YOUR_OPENAI_API_KEY>")

def generate_llm_response(messages: List[Dict[str, str]]) -> str:

response = client.chat.completions.create(

model="gpt-4.1-nano",

messages=messages

)

return response.choices[0].message.content

Here we rely on a simple function to update chat_history, but you can customize it to control how history is managed (e.g. trimming older messages, summarizing earlier context, etc).

Optional: Define update_chat_history() method for managing chat history

def update_chat_history(chat_history: List[Dict[str, str]], role: str, content: str) -> List[Dict[str, str]]:

"""

Append a new message to the chat history.

This function updates the chat history by adding a new dictionary entry with

the specified role ('user', 'assistant', 'system') and content (message text).

Args:

chat_history (List[Dict[str, str]]): The current list of messages in the chat history.

role (str): The role of the message sender (e.g., 'user', 'assistant').

content (str): The textual content of the message.

Returns:

List[Dict[str, str]]: The updated chat history including the new message.

"""

chat_history.append({"role": role, "content": content})

return chat_history

Optional: generate_llm_response implementation for Claude

Remember to pip install anthropic

import anthropic

client = anthropic.Anthropic(api_key="<YOUR_CLAUDE_API_KEY>")

def generate_llm_response_claude(messages: List[Dict[str, str]]) -> str:

response = client.messages.create(

model="claude-3-haiku-20240307",

max_tokens=1000,

system=system_prompt,

messages=messages[1:] # system prompt passed separately

)

return response.content[0].text

Optional: generate_llm_response implementation for Gemini

Remember to pip install google-genai

import google.generativeai as genai

client = genai.Client(api_key="<YOUR_GEMINI_API_KEY>")

def generate_llm_response_gemini(messages: List[Dict[str, str]]) -> str:

config = types.GenerateContentConfig(system_instruction=system_prompt)

messages = messages[1:] # system prompt passed separately

gemini_messages = [

{

"role": "model" if msg["role"] == "assistant" else "user",

"parts": [{"text": msg["content"]}]

}

for msg in messages

]

response = client.models.generate_content(

model="gemini-1.5-flash-8b",

contents=gemini_messages,

config=config

)

return response.text

Optional: generate_llm_response implementation for LlamaIndex

Remember to pip install llama-index

from llama_index.llms.openai import OpenAI # or any other llama-index LLM wrapper

from llama_index.core.base.llms.types import ChatMessage, MessageRole

llm = OpenAI(

model="gpt-4.1-nano",

api_key="<YOUR_OPENAI_API_KEY>"

)

def generate_llm_response_llama(messages: List[Dict[str, str]]) -> str:

role_map = {

"system": MessageRole.SYSTEM,

"user": MessageRole.USER,

"assistant": MessageRole.ASSISTANT,

}

chat_msgs = [

ChatMessage(role=role_map[m["role"]], content=m["content"])

for m in messages

]

response = llm.chat(chat_msgs)

return response.message.content.strip()

Optional: generate_llm_response implementation for LiteLLM

Remember to pip install litellm

import litellm

litellm.api_key = "<YOUR_OPENAI_API_KEY>"

def generate_llm_response_litellm(messages: List[Dict[str, str]]) -> str:

response = litellm.completion(

model="openai/gpt-4o-mini",

messages=messages,

max_tokens=1000,

)

return response["choices"][0]["message"]["content"]

Example Use Case: Customer Support Chatbot

Let’s consider a customer support chatbot use case. Replace our example system prompt and conversations with your own, and Cleanlab will evaluate the trustworthiness of each response in real-time based on the full conversation history and system instructions.

Optional: Define system_prompt for our customer support chatbot

system_prompt = """The following is the customer service policy of ACME Inc.

# ACME Inc. Customer Service Policy

## Table of Contents

1. Free Shipping Policy

2. Free Returns Policy

3. Fraud Detection Guidelines

4. Customer Interaction Tone

## 1. Free Shipping Policy

### 1.1 Eligibility Criteria

- Free shipping is available on all orders over $50 within the continental United States.

- For orders under $50, a flat rate shipping fee of $5.99 will be applied.

- Free shipping is not available for expedited shipping methods (e.g., overnight or 2-day shipping).

### 1.2 Exclusions

- Free shipping does not apply to orders shipped to Alaska, Hawaii, or international destinations.

- Oversized or heavy items may incur additional shipping charges, which will be clearly communicated to the customer before purchase.

### 1.3 Handling Customer Inquiries

- If a customer inquires about free shipping eligibility, verify the order total and shipping destination.

- Inform customers of ways to qualify for free shipping (e.g., adding items to reach the $50 threshold).

- For orders just below the threshold, you may offer a one-time courtesy free shipping if it's the customer's first purchase or if they have a history of large orders.

### 1.4 Processing & Delivery Timeframes

- Standard orders are processed within 1 business day; during peak periods (e.g., holidays) allow up to 3 business days.

- Delivery via ground service typically takes 3-7 business days depending on destination.

### 1.5 Shipment Tracking & Notifications

- A tracking link must be emailed automatically once the carrier scans the package.

- Agents may resend tracking links on request and walk customers through carrier websites if needed.

### 1.6 Lost-Package Resolution

1. File a tracer with the carrier if a package shows no movement for 7 calendar days.

2. Offer either a replacement shipment or a full refund once the carrier confirms loss.

3. Document the outcome in the order record for analytics.

### 1.7 Sustainability & Packaging Standards

- Use recyclable or recycled-content packaging whenever available.

- Consolidate items into a single box to minimize waste unless it risks damage.

## 2. Free Returns Policy

### 2.1 Eligibility Criteria

- Free returns are available for all items within 30 days of the delivery date.

- Items must be unused, unworn, and in their original packaging with all tags attached.

- Free returns are limited to standard shipping methods within the continental United States.

### 2.2 Exclusions

- Final sale items, as marked on the product page, are not eligible for free returns.

- Customized or personalized items are not eligible for free returns unless there is a manufacturing defect.

- Undergarments, swimwear, and earrings are not eligible for free returns due to hygiene reasons.

### 2.3 Process for Handling Returns

1. Verify the order date and ensure it falls within the 30-day return window.

2. Ask the customer about the reason for the return and document it in the system.

3. Provide the customer with a prepaid return label if they qualify for free returns.

4. Inform the customer of the expected refund processing time (5-7 business days after receiving the return).

### 2.4 Exceptions

- For items damaged during shipping or with manufacturing defects, offer an immediate replacement or refund without requiring a return.

- For returns outside the 30-day window, use discretion based on the customer's history and the reason for the late return. You may offer store credit as a compromise.

### 2.5 Return Package Preparation Guidelines

- Instruct customers to reuse the original box when possible and to cushion fragile items.

- Advise removing or obscuring any prior shipping labels.

### 2.6 Inspection & Restocking Procedures

- Returns are inspected within 48 hours of arrival.

- Items passing inspection are restocked; those failing inspection follow the disposal flow in § 2.8.

### 2.7 Refund & Exchange Timeframes

- Refunds to the original payment method post within 5-7 business days after inspection.

- Exchanges ship out within 1 business day of successful inspection.

### 2.8 Disposal of Non-Restockable Goods

- Defective items are sent to certified recyclers; lightly used goods may be donated to charities approved by the CSR team.

## 3. Fraud Detection Guidelines

### 3.1 Red Flags for Potential Fraud

- Multiple orders from the same IP address with different customer names or shipping addresses.

- Orders with unusually high quantities of the same item.

- Shipping address different from the billing address, especially if in different countries.

- Multiple failed payment attempts followed by a successful one.

- Customers pressuring for immediate shipping or threatening to cancel the order.

### 3.2 Verification Process

1. For orders flagging as potentially fraudulent, place them on hold for review.

2. Verify the customer's identity by calling the phone number on file.

3. Request additional documentation (e.g., photo ID, credit card statement) if necessary.

4. Cross-reference the shipping address with known fraud databases.

### 3.3 Actions for Confirmed Fraud

- Cancel the order immediately and refund any charges.

- Document the incident in the customer's account and flag it for future reference.

- Report confirmed fraud cases to the appropriate authorities and credit card companies.

### 3.4 False Positives

- If a legitimate customer is flagged, apologize for the inconvenience and offer a small discount or free shipping on their next order.

- Document the incident to improve our fraud detection algorithms.

### 3.5 Chargeback Response Procedure

1. Gather all order evidence (invoice, shipment tracking, customer communications).

2. Submit documentation to the processor within 3 calendar days of chargeback notice.

3. Follow up weekly until the dispute is closed.

### 3.6 Data Security & Privacy Compliance

- Store verification documents in an encrypted, access-controlled folder.

- Purge personally identifiable information after 180 days unless required for ongoing legal action.

### 3.7 Continuous Improvement & Training

- Run quarterly reviews of fraud rules with data analytics.

- Provide annual anti-fraud training to all front-line staff.

### 3.8 Record-Keeping Requirements

- Maintain a log of all fraud reviews—including false positives—for 3 years to support audits.

## 4. Customer Interaction Tone

### 4.1 General Guidelines

- Always maintain a professional, friendly, and empathetic tone.

- Use the customer's name when addressing them.

- Listen actively and paraphrase the customer's concerns to ensure understanding.

- Avoid negative language; focus on what can be done rather than what can't.

### 4.2 Specific Scenarios

#### Angry or Frustrated Customers

- Remain calm and do not take comments personally.

- Acknowledge the customer's feelings and apologize for their negative experience.

- Focus on finding a solution and clearly explain the steps you'll take to resolve the issue.

- If necessary, offer to escalate the issue to a supervisor.

#### Confused or Indecisive Customers

- Be patient and offer clear, concise explanations.

- Ask probing questions to better understand their needs.

- Provide options and explain the pros and cons of each.

- Offer to send follow-up information via email if the customer needs time to decide.

#### VIP or Loyal Customers

- Acknowledge their status and thank them for their continued business.

- Be familiar with their purchase history and preferences.

- Offer exclusive deals or early access to new products when appropriate.

- Go above and beyond to exceed their expectations.

### 4.3 Language and Phrasing

- Use positive language: "I'd be happy to help you with that" instead of "I can't do that."

- Avoid technical jargon or abbreviations that customers may not understand.

- Use "we" statements to show unity with the company: "We value your feedback" instead of "The company values your feedback."

- End conversations on a positive note: "Is there anything else I can assist you with today?"

### 4.4 Written Communication

- Use proper grammar, spelling, and punctuation in all written communications.

- Keep emails and chat responses concise and to the point.

- Use bullet points or numbered lists for clarity when providing multiple pieces of information.

- Include a clear call-to-action or next steps at the end of each communication.

### 4.5 Response-Time Targets

- Live chat: respond within 30 seconds.

- Email: first reply within 4 business hours (max 24 hours during peak).

- Social media mentions: acknowledge within 1 hour during staffed hours.

### 4.6 Accessibility & Inclusivity

- Offer alternate text for images and use plain-language summaries.

- Provide TTY phone support and ensure web chat is screen-reader compatible.

### 4.7 Multichannel Etiquette (Phone, Chat, Social)

- Use consistent greetings and closings across channels.

- Avoid emojis in formal email; limited, brand-approved emojis allowed in chat or social when matching customer tone.

### 4.8 Proactive Outreach & Follow-Up

- After resolving a complex issue, send a 24-hour satisfaction check-in.

- Tag VIP accounts for quarterly “thank-you” notes highlighting new offerings.

### 4.9 Documentation of Customer Interactions

- Log every interaction in the CRM within 15 minutes of completion, including sentiment and resolution code.

- Use standardized tags to support trend analysis and training.

You are a customer service assistant for ACME Inc. Answer customer questions accurately, concisely, and in line with the company's service policy. Do not suggest contacting customer service.

Remember, as a representative of ACME Inc., you are often the first point of contact for our customers. Your interactions should always reflect our commitment to exceptional customer service and satisfaction.

"""

chat_history = [{"role": "system", "content": system_prompt}]

Scoring trustworthiness of LLM responses

To score the trustworthiness of each LLM response in the chat, let’s instantiate a TLM client and log TLM explanations to understand why certain LLM responses were considered untrustworthy.

tlm = TLM(options={'log':['explanation']}) # See Advanced Tutorial for optional TLM configurations to get better/faster results

Optional: Define display_tlm_feedback() helper function to print TLM results

import textwrap

def display_tlm_feedback(response_text: str, resp: dict, threshold: float = 0.8, explanation: bool = True):

print("=" * 30 + " AI Message " + "=" * 30)

# Wrap and print the AI response across multiple lines

wrapped_response = textwrap.fill(response_text.strip(), width=100)

print(wrapped_response + "\n")

score = resp.get("trustworthiness_score")

if score is None:

print("[TLM Error]: No trustworthiness score found in response.")

return

label = "Trustworthy" if score >= threshold else "Untrustworthy"

print(f"[TLM Score]: {score} ({label})")

if explanation:

explanation_text = resp.get("log", {}).get("explanation")

if explanation_text:

print(f"[TLM Score Explanation]: {explanation_text}")

else:

print("[TLM Warning]: Enable the 'explanation' option in the TLM client to receive detailed reasoning.")

TLM.get_trustworthiness() requires two parameters: prompt and response. For a multi-turn chat: the prompt should include the conversation history up to (but not including) the last assistant response, plus any system instructions or retrieved context that was also provided to your LLM when it generated a response.

Simply convert your chat history into a prompt string using TLM’s form_prompt_string() method.

Let’s run our chatbot with trustworthiness scoring.

user_input = "Can I return my jeans even if I've worn them?"

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "user", user_input)

response = generate_llm_response(chat_history)

# Extra code for trustworthiness scoring

tlm_results = tlm.get_trustworthiness_score(

prompt = form_prompt_string(chat_history), response=response

)

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "assistant", response)

# Code just for this tutorial, to display results

display_tlm_feedback(response_text=response, resp=tlm_results, threshold=0.85, explanation=True)

Reviewing the chatbot response, we find that it clearly and accurately follows the refund policy. The trustworthiness score automatically confirmed this in real time. Next, we’ll evaluate the chatbot’s response to a follow-up question — one that depends on the context established in the first exchange.

user_input = "I've only worn it once. Can I just pay the shipping fee?"

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "user", user_input)

response = generate_llm_response(chat_history)

# Extra code for trustworthiness scoring

tlm_results = tlm.get_trustworthiness_score(

prompt = form_prompt_string(chat_history), response=response

)

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "assistant", response)

# Code just for this tutorial, to display results

display_tlm_feedback(response_text=response, resp=tlm_results, threshold=0.85, explanation=True)

The chatbot successfully relied on the earlier conversation history to answer. However, TLM assigned the response a low trustworthiness score.

Referring to the policy in our LLM’s system prompt:

Free returns are available for all items within 30 days of the delivery date.

We see the policy does not clarify whether items can still be returned if the customer were to cover return shipping, leading to ambiguity regarding what is a correct response. TLM automatically detected this issue in real-time.

Handling open-ended / non-propositional responses and other custom evaluations (click to expand)

You can also specify custom TLM evaluations to assess specific criteria. For example, you may not want to rely on TLM’s trustworthiness score for every chatbot response, but rather only for verifiable statements that convey information (these are called propositional responses). You can run TLM with custom evaluation criteria that scores how non-propositional each response is, and only consider TLM’s trustworthiness score for those responses whose custom evaluation score is low.

Here’s an example implmentation of this strategy:

# Run TLM with custom eval assessing how non-propositional each response is

custom_eval_criteria_option = {"custom_eval_criteria": [

{"name": "Non-Propositionality", "criteria": "Determine whether the response is non-propositional, in which case it is great. Otherwise it is a bad response if it conveys any specific information or facts, or otherwise seems like an answer whose accuracy could matter."}

]}

tlm_custom_eval = TLM(options=custom_eval_criteria_option)

# Helper method to handle propostional responses

def update_trust_score(tlm_results, custom_score_threshold=0.8, high_trust_score = 0.99):

"""

Assumes `tlm_results` are the output of TLM run with a custom evaluation criteria.

Whenever the custom evaluation score is higher than `custom_score_threshold`,

this method overrides the TLM trustworthiness score to be high.

Returns: updated `tlm_results` (this object is mutated in place).

"""

if not 'log' in tlm_results:

raise ValueError("tlm_results must be output of TLM run with a custom evaluation criteria")

if not 'custom_eval_criteria' in tlm_results['log']:

raise ValueError("tlm_results must be output of TLM run with a custom evaluation criteria")

if len(tlm_results['log']['custom_eval_criteria']) != 1:

raise ValueError("tlm_results must be output of TLM run with a single custom evaluation criteria")

if not 'score' in tlm_results["log"]["custom_eval_criteria"][0]:

raise ValueError("custom evaluation criteria score is missing in tlm_results")

custom_score = tlm_results["log"]["custom_eval_criteria"][0]["score"]

if custom_score > custom_score_threshold:

tlm_results["trustworthiness_score"] = high_trust_score

Here’s how to run this implementation on an open-ended LLM response whose trustworthiness doesn’t really matter:

user_input = "Hi!"

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "user", user_input)

response = generate_llm_response(chat_history)

# Extra code for trustworthiness scoring

tlm_results = tlm_custom_eval.get_trustworthiness_score(

prompt = form_prompt_string(chat_history), response=response

)

update_trust_score(tlm_results) # possibly override trust scores

# Your existing LLM chat code

chat_history = update_chat_history(chat_history, "assistant", response)

# Code just for this tutorial, to display results

display_tlm_feedback(response_text=response, resp=tlm_results, threshold=0.85, explanation=False)

This tutorial relied on form_prompt_string(messages) to convert chat messages into a string prompt for TLM.

Alternatively, you can apply TLM directly to messages in OpenAI’s Chat Completions format as shown here: TLM for Chat Completions API