RAG with Tool Calls in smolagents

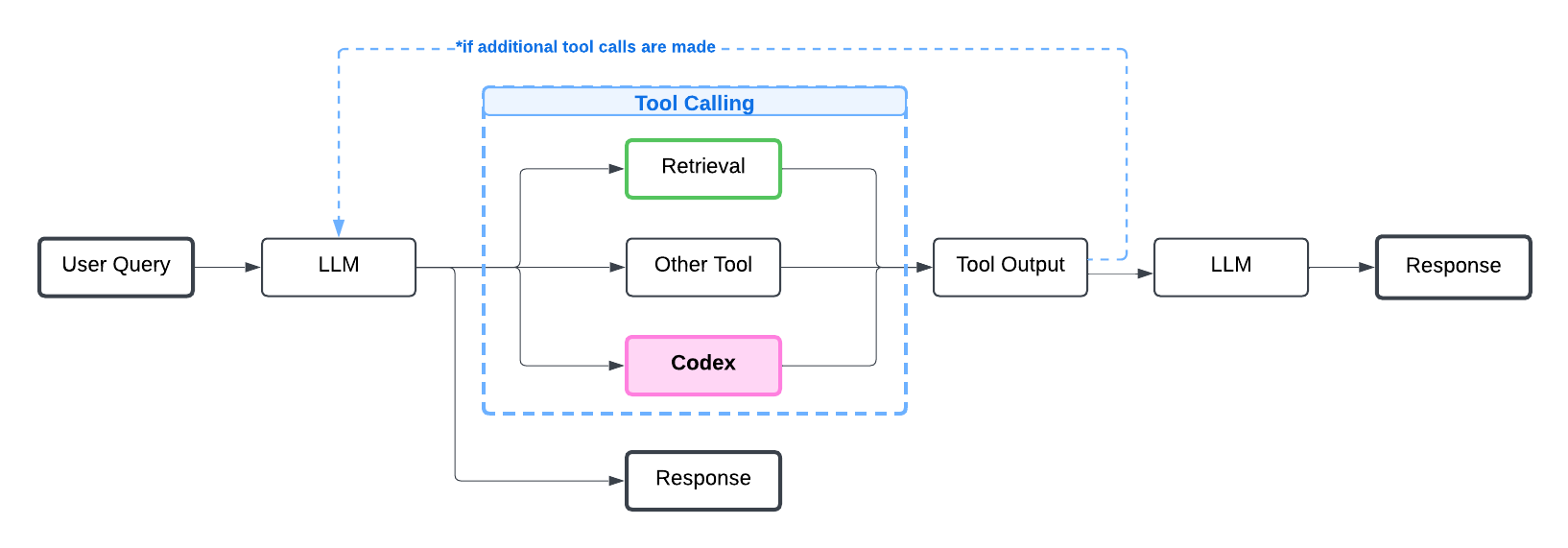

This tutorial demonstrates how to build a smolagents agent that can call tools and answer user questions based on a Knowledge Base of files you provide. Because retrieval is implemented as a tool that the Agent can choose to call, this approach differs from standard RAG, where retrieval is typically hardcoded as a step in every user interaction.

Building such an AI Agent is a prerequisite for our tutorial: Integrate Codex-as-a-tool with smolagents, which shows how to improve an existing Agent so it can answer questions it previously could not.

The code provided in this notebook is for an Agentic RAG application, with single-turn conversations.

Let’s first install packages required for this tutorial. Most of these packages are the same as those used in smolagents’ RAG tutorial.

%pip install smolagents pandas langchain langchain-community rank_bm25 --upgrade -q

%pip install litellm  # Optional dependency of smolagents, used here as a gateway to many LLMs

import os

os.environ["OPENAI_API_KEY"] = "<YOUR-KEY-HERE>" # Replace with your OpenAI API key

Example Agent: Product Customer Support

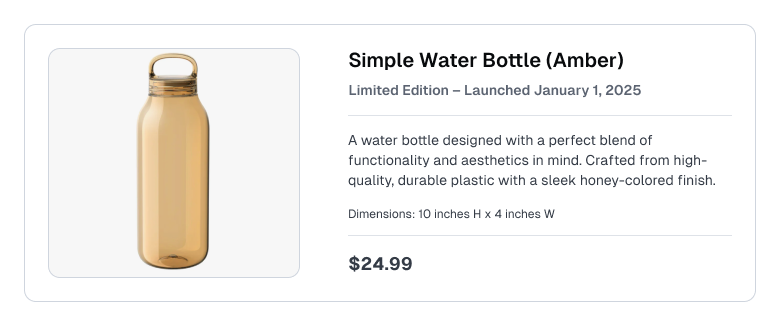

Consider a customer support / e-commerce RAG use-case where the Knowledge Base contains product listings like the following:

To keep our example minimal, our Agent’s Knowledge Base here only contains a single document featuring this one product description. The document is split into smaller chunks to enable more precise retrieval.
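The chunking step can be illustrated with a minimal, standard-library sketch. It splits text into overlapping word windows; the function name `split_into_chunks`, the window sizes, and the placeholder text are assumptions made for illustration, and the tutorial's own helper may split documents differently (for example with a LangChain text splitter).

```python
def split_into_chunks(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into overlapping chunks of at most `chunk_size` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


# Placeholder text (w0 ... w19), not the tutorial's product description.
document = " ".join(f"w{i}" for i in range(20))
chunks = split_into_chunks(document, chunk_size=8, overlap=2)
for chunk in chunks:
    print(chunk)
```

The overlap means the last words of one chunk reappear at the start of the next, so a sentence that straddles a boundary is less likely to be cut off from its context.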

The processed documents get passed to a retriever tool that we define. It uses BM25, a simple but effective text retrieval algorithm, which returns relevant text chunks based on keyword matching when the tool is invoked.
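To make the keyword-matching idea concrete, here is a small pure-Python sketch of Okapi BM25 scoring. It is not the retriever used in this tutorial (which relies on an installed package); the function names, the parameter values `k1=1.5` and `b=0.75`, the non-negative IDF variant, and the example chunks are all assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphanumeric tokens only."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, chunks: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Return one BM25 score per chunk for the given query."""
    if not chunks:
        return []
    tokenized = [tokenize(chunk) for chunk in chunks]
    n_chunks = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_chunks
    # Number of chunks in which each term appears at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    query_terms = set(tokenize(query))
    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query_terms:
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            # Non-negative IDF variant (an assumption; libraries differ here).
            idf = math.log(1 + (n_chunks - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = 1 - b + b * len(tokens) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return up to `top_k` chunks with a positive score, best first."""
    scores = bm25_scores(query, chunks)
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)
    return [chunks[i] for i in ranked[:top_k] if scores[i] > 0]


# Hypothetical chunks, unrelated to the tutorial's product listing.
example_chunks = [
    "The lamp is 40 cm tall and weighs 1 kg.",
    "The lamp ships with a two year warranty.",
    "Orders are delivered within five business days.",
]
print(retrieve("How tall is the lamp?", example_chunks))
print(retrieve("refund policy", example_chunks))
```

Because BM25 only matches keywords, a query that shares no terms with any chunk retrieves nothing, which is one reason an Agent may be unable to answer a question from its Knowledge Base.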

Optional: Define helper methods for Knowledge Base creation and retrieval

Create agent that supports Tool Calls

We instantiate smolagents’ CodeAgent class, which uses the underlying LLM to formulate tool calls as code, then parses and executes that code. As this class is optimized for single-turn interactions, we’ll focus on those use cases here.
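The idea of "tool calls in code format" can be pictured with a toy sketch: the LLM emits a short Python snippet, and the framework runs it in a namespace that exposes the tools. This is not how smolagents is implemented; `run_code_action`, the stub tool with its fixed date, and the generated snippet are all hypothetical, and the `final_answer` name is only borrowed for readability.

```python
from datetime import datetime


def get_todays_date(date_format: str) -> str:
    """Stub tool that uses a fixed date so the example is reproducible."""
    return datetime(2025, 1, 15).strftime(date_format)


def run_code_action(code: str, tools: dict) -> object:
    """Execute one code action and return whatever it passes to final_answer()."""
    result = {}

    def final_answer(value):
        result["value"] = value

    # Expose only the tools and final_answer to the generated code.
    # Emptying __builtins__ is NOT a real sandbox; it only keeps this demo small.
    namespace = {"__builtins__": {}, **tools, "final_answer": final_answer}
    exec(code, namespace)
    return result.get("value")


# Hypothetical snippet of the kind an LLM might generate for a date question.
generated_code = '''
year = get_todays_date("%Y")
final_answer("The current year is " + year)
'''

answer = run_code_action(generated_code, {"get_todays_date": get_todays_date})
print(answer)
```

Expressing actions as code lets a single step combine several tool calls and ordinary logic, which is harder to do when each tool call must be a separate structured message.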

Example tool: get_todays_date

Let’s define an example tool get_todays_date() that our Agent can rely on. We use the @tool decorator from the smolagents library to define the tool, ready for use in the Agent.

from datetime import datetime

from smolagents import tool

@tool
def get_todays_date(date_format: str) -> str:
    """A tool that returns today's date in the date format requested. Use this tool when knowing today's date is necessary to answer the question, like checking if something is available now or calculating how long until a future or past date.

    Args:
        date_format: The date format to return today's date in. Options are: '%Y-%m-%d', '%d', '%m', '%Y'. The default is '%Y-%m-%d'.

    Returns:
        str: Today's date in the requested format.
    """
    datetime_str = datetime.now().strftime(date_format)
    return datetime_str
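To see what each of the format options listed in the docstring produces, the following sketch applies them to a fixed date. The date 2025-01-15 is an arbitrary assumption used so the output is reproducible; the tool itself uses the current date.

```python
from datetime import datetime

# Arbitrary fixed date so the output does not depend on when this runs.
fixed_date = datetime(2025, 1, 15)

allowed_formats = ["%Y-%m-%d", "%d", "%m", "%Y"]
formatted = {fmt: fixed_date.strftime(fmt) for fmt in allowed_formats}

for fmt, value in formatted.items():
    print(f"{fmt!r:12} -> {value}")
```

The four options yield the full ISO-style date, the zero-padded day, the zero-padded month, and the four-digit year, respectively.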

Update our tools with tool call instructions

For the best performance with smolagents’ coding Agent, put instructions on when to use a tool in the tool description itself. The smolagents framework includes these descriptions in the system prompt that governs your LLM.

Initialize AI Agent

Let’s initialize an LLM with tool-calling capabilities, as well as the retriever tool, then build the agent. We add the get_todays_date tool into the Agent as well.

Optional: Code to create the AI Agent

RAG in action

Let’s ask our Agent common questions from users about the Simple Water Bottle in our example.

Scenario 1: RAG Agent can answer the question using its Knowledge Base

user_question = "How big is the water bottle?"

response = agent.run(user_question)

print(response)

Here the Agent was able to provide a good answer because its Knowledge Base contains the necessary information.

Scenario 2: RAG Agent can answer the question using other tools

user_question = "Check today's date. Has the limited edition Amber water bottle already launched?"

response = agent.run(user_question)

print(response)

In this case, the Agent chose to call our get_todays_date tool to obtain information necessary for properly answering the user’s query. Note that a proper answer to this question also requires information from the Knowledge Base.
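The reasoning in this scenario combines two pieces of information: a launch date found in the Knowledge Base and today's date from the tool. A minimal sketch of that comparison is shown here; the `has_launched` helper and both dates are hypothetical and are not taken from the tutorial's product listing.

```python
from datetime import datetime


def has_launched(launch_date: str, today: str, date_format: str = "%Y-%m-%d") -> bool:
    """Return True if `today` is on or after `launch_date`."""
    launch = datetime.strptime(launch_date, date_format).date()
    current = datetime.strptime(today, date_format).date()
    return current >= launch


# Hypothetical dates for illustration only.
print(has_launched("2025-03-01", today="2025-01-15"))  # before the launch date
print(has_launched("2025-03-01", today="2025-03-01"))  # on the launch date
```

Parsing both strings into date objects before comparing avoids mistakes that can occur when dates in different formats are compared as plain text.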

Scenario 3: RAG Agent can’t answer the question

user_question = "Can I return my simple water bottle?"

response = agent.run(user_question)

print(response)

This Agent’s Knowledge Base does not contain information about the return policy, and the get_todays_date tool would not help here either. In this case, the best our Agent can do is to return our fallback response to the user.

Next steps

Once you have an Agent that can call tools, adding Codex as a Tool takes only a few lines of code. Codex enables your AI Agent to answer questions it previously could not (like Scenario 3 above). Learn how via our tutorial: Integrate Codex-as-a-tool with smolagents

Need help? Check the FAQ or email us at: support@cleanlab.ai