Detect and remediate bad responses from conversational RAG applications

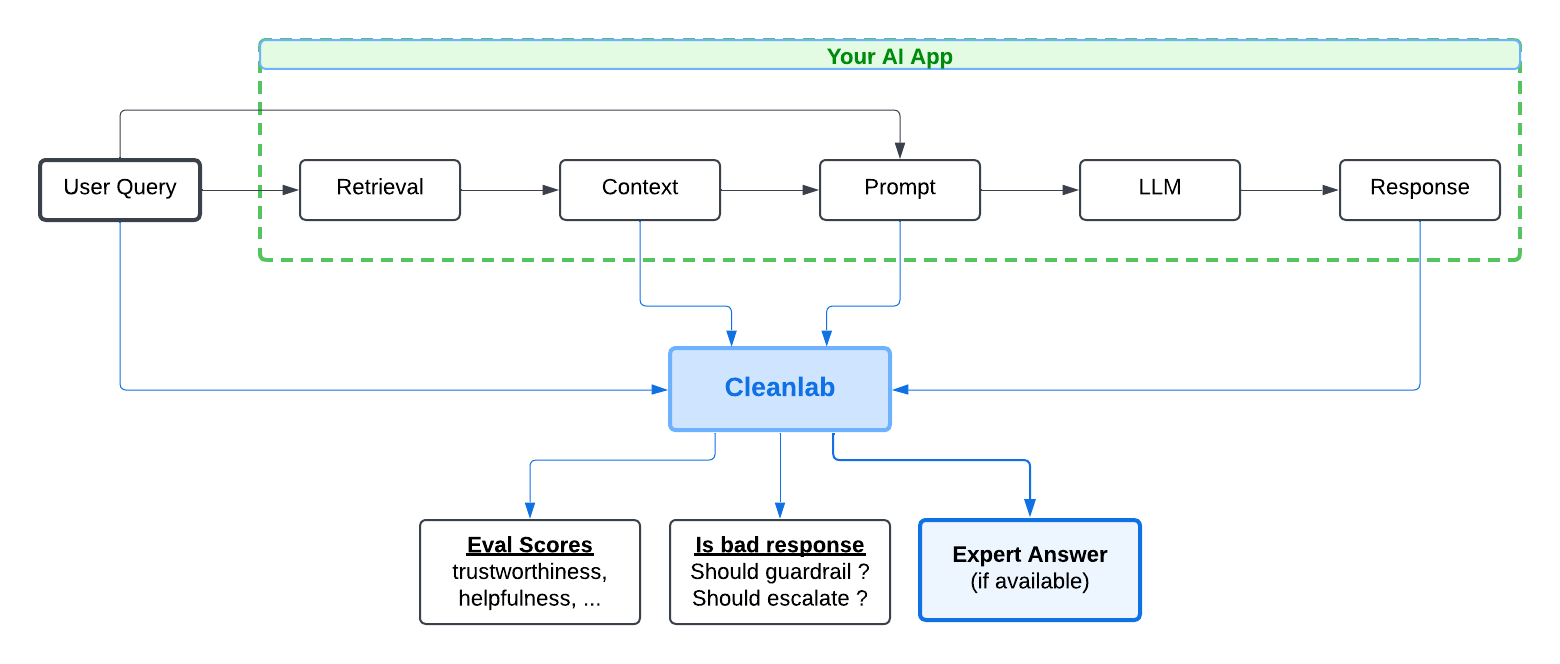

This tutorial demonstrates how to automatically improve any multi-turn chat RAG application by integrating the Cleanlab AI Platform. The Cleanlab API takes in the AI-generated response from your RAG app, and the same inputs provided to the LLM that generated it: user query, retrieved context, and any parts of the LLM prompt (including the chat history). Cleanlab will automatically detect if your AI response is bad (e.g., untrustworthy, unhelpful, or unsafe). The Cleanlab API returns these real-time evaluation scores which you can use to guardrail your AI. If your AI response is flagged as bad, the Cleanlab API will also return an expert response whenever a similar query has been answered in the connected Cleanlab Project, or otherwise log this query into the Cleanlab Project for SMEs to answer.

Overview

Here’s all the code needed for using Cleanlab with your RAG system:

from cleanlab_codex import Project

project = Project.from_access_key(access_key)

# Your existing RAG code:

context = rag_retrieve_context(user_query)

messages = rag_form_prompt(user_query, context, conversation_history)

response = rag_generate_response(messages)

# Detect bad responses and remediate with Cleanlab

results = project.validate(messages=messages, query=user_query, context=context, response=response)

final_response = (

results.expert_answer if results.expert_answer # and results.escalated_to_sme (Note: uncomment this to utilize Codex expert-answers solely as a backup)

else fallback_response if results.should_guardrail

else response

)

# Update the conversation history

conversation_history.append({"role": "user", "content": user_query})

conversation_history.append({"role": "assistant", "content": final_response})

Note: This tutorial is for Multi-turn Chat Apps. If you have a Single-turn Q&A app, a similar workflow is covered in the Detect and Remediate bad responses in Single-turn Q&A Apps tutorial.

Setup

This tutorial requires a Cleanlab API key. Get one here.

%pip install --upgrade cleanlab-codex pandas

# Set your Codex API key

import os

os.environ["CODEX_API_KEY"] = "<API key>" # Get your free API key from: https://codex.cleanlab.ai/account

# Import libraries

import pandas as pd

from cleanlab_codex import Project

Example RAG App: Product Customer Support

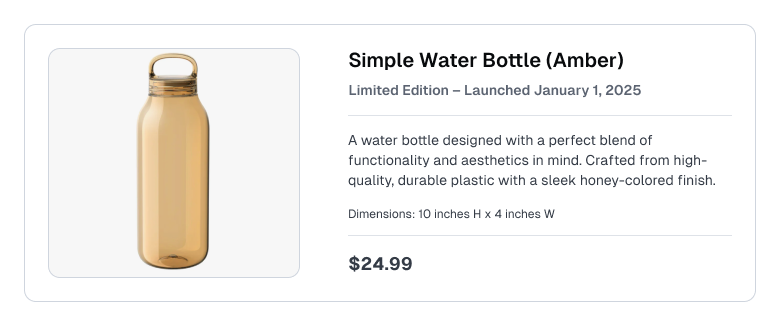

Consider a customer support / e-commerce RAG use-case where the Knowledge Base contains product listings like the following:

Here, the inner workings of the RAG app are not important for this tutorial. What is important is that the RAG app generates a response based on a user query, a context, and a prior conversation history, which are all made available for evaluation.

For simplicity, our context is hardcoded as the product listing below. Similarly, the current step of the conversation history is hardcoded as well. You should replace these with the outputs of your RAG system, noting that Cleanlab can detect issues in these outputs in real-time.

product_listing = """Simple Water Bottle - Amber (limited edition launched Jan 1st 2025)

A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish.

Price: $24.99

Dimensions: 10 inches height x 4 inches width"""

Optional: Example queries, retrieved context + generated response from RAG system dataframe

data = [

{

"query": "How much does the Simple Water Bottle cost?",

"context": product_listing,

"response": "The Simple Water Bottle costs $24.99.",

},

{

"query": "Can I ask a question about the Simple Water Bottle?",

"context": product_listing,

"response": "Sure! What would you like to know?",

},

{

"query": "How much water can it hold?",

"context": product_listing,

"response": "The Simple Water Bottle can hold 16 oz of Water",

},

{

"query": "Can I bulk order the bottles?",

"context": product_listing,

"response": "I am sorry, I do not have information about bulk orders for the Simple Water Bottle.",

},

]

df = pd.DataFrame(data)

df

In practice, your RAG system should already have functions to retrieve context, generate responses, and build a messages object to prompt the LLM with.

For this tutorial, we’ll simulate these functions using the above fields as well as define a simple fallback_response, system_prompt, and prompt_template.

Optional: Toy RAG methods you should replace with existing methods from your RAG system

fallback_response = "I'm sorry, I couldn't find an answer for that — can I help with something else?"

system_prompt = "You are a customer service agent. Be polite and concise in your responses."

prompt_template = """Answer the following customer question based on the product listing.

Customer Question: {query}

"""

def rag_retrieve_context(query):

"""Simulate retrieval from a knowledge base"""

# In a real system, this would search the knowledge base

for item in data:

if item["query"] == query:

return item["context"]

return ""

def rag_generate_response(messages):

"""Simulate LLM response generation"""

# In a real system, this would call an LLM

for item in data:

if item["query"] in messages[-1]["content"]:

return item["response"]

# Return a fallback response if the LLM is unable to answer the question

return fallback_response

def rag_form_prompt(query, context, conversation_history):

"""Form a prompt for your LLM response-generation step (from the user query, retrieved context, conversation history, system instructions, etc). We represent the `prompt` in OpenAI's `messages` format, which matches the input to Cleanlab's `validate()` method.

**Note:** In `messages`, it is recommended to inject retrieved context into the system prompt rather than each user message.

"""

system_prompt_with_context = {"role": "system", "content": system_prompt + f"\n\nContext\n{context}\n"}

user_query_prompt = {"role": "user", "content": prompt_template.format(query=query)}

messages = [system_prompt_with_context] + conversation_history + [user_query_prompt]

return messages

Create Cleanlab Project

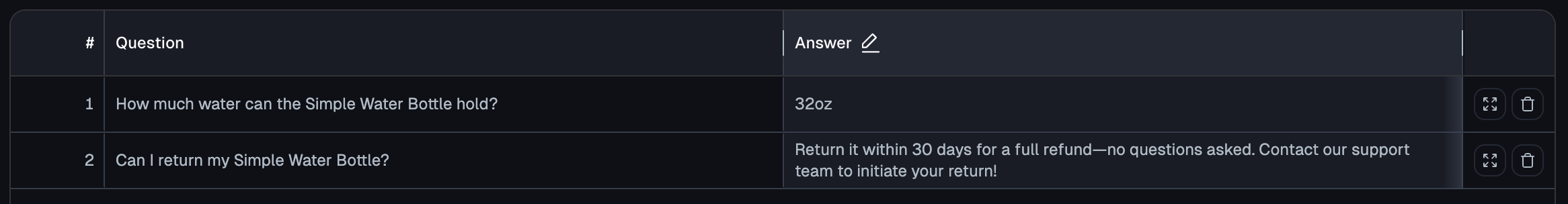

To later use the Cleanlab AI Platform, we must first create a Project. Here we assume some (question, answer) pairs have already been added to the Cleanlab Project.

Our existing Cleanlab Project contains the following entries:

User queries where Cleanlab detected a bad response from your RAG app will be logged in this Project for SMEs to later answer.

Running detection and remediation

Now that our Cleanlab Project is configured, we can use the Project.validate() method to detect bad responses from our RAG application. A single call runs many real-time Evals to score each AI response, and when scores fall below certain thresholds, the response is flagged for guardrailing or for SME review.

When your AI response is flagged for SME review, the Project.validate() call will simultaneously query Cleanlab for an expert answer that can remediate your bad AI response. If no suitable expert answer is found, this query will be logged as Unaddressed in the Cleanlab Project for SMEs to answer

When a response is flagged for guardrailing, the should_guardrail return value will be marked as True. You can choose to return a safer fallback response in place of the original AI response, or escalate to a human employee rather than letting your AI handle this case.

Here’s some logic to determine the final_response to return to your user.

final_response = (

results.expert_answer if results.expert_answer. # and results.escalated_to_sme (Note: uncomment this to utilize Cleanlab expert-answers solely as a backup)

else fallback_response if results.should_guardrail

else initial_response

)

Let’s initialize the Project using our access key:

access_key = "<YOUR-PROJECT-ACCESS-KEY>" # Obtain from your Project's settings page: https://codex.cleanlab.ai/

project = Project.from_access_key(access_key)

Applying the Project.validate() method to a RAG system is straightfoward. Here we do this using a mock RAG chat application and a helper function that validates a single chat turn.

Optional: Toy RAG.chat() method for a single turn of a conversation.

def rag_chat(query, conversation_history):

"""Ask a question to the RAG pipeline and return the response."""

# Add user query to the conversation history

updated_conversation_history = conversation_history + [{"role": "user", "content": query}]

context = rag_retrieve_context(query)

messages = rag_form_prompt(

query=query,

context=context,

conversation_history=updated_conversation_history,

)

response = rag_generate_response(messages)

# Add response to the updated conversation history

updated_conversation_history.append({"role": "assistant", "content": response})

rag_response = {

"query": query,

"context": context,

"response": response,

"messages": messages,

"conversation_history": updated_conversation_history,

}

return rag_response

def run_validation(user_query, conversation_history, project, verbosity=0):

"""

Detect and remediate bad responses in a chat turn of a Conversational RAG system.

Args:

user_query (str): The user query to validate.

conversation_history (list): The conversation history to validate.

project (Project): The Codex Project object used to detect bad responses and remediate them. verbosity (int): Whether to print verbose output. Defaults to 0.

At verbosity level 0, only the query and final response are printed.

At verbosity level 1, the initial RAG response and the validation results are printed as well.

At verbosity level 2, the retrieved context is also printed.

Returns:

list: Updated conversation history with the final response.

"""

print(f"User Query: {user_query}\n")

# 1. Run standard RAG pipeline

rag_response = rag_chat(user_query, conversation_history)

if verbosity >= 2:

print(f"Retrieved context:\n{rag_response['context']}\n")

if verbosity >= 1:

print(f"Initial RAG response: {rag_response['response']}\n")

# 3. Detect and remediate bad responses

results = project.validate(

messages=rag_response["messages"],

response=rag_response["response"],

query=user_query,

context=rag_response["context"],

)

# 4. Get the final response:

# - Use the fallback_response if the response was flagged as requiring guardrails

# - Use an expert answer if available

# - Otherwise, use the initial response

final_response = (

results.expert_answer if results.expert_answer # and results.escalated_to_sme (Note: uncomment this to utilize Cleanlab expert-answers solely as a backup)

else fallback_response if results.should_guardrail

else rag_response["response"] # initial response from the RAG system

)

print(f"Final Response: {final_response}\n")

# 5. Update the conversation history with the final response (instead of the initial response)

updated_conversation_history = rag_response["conversation_history"]

updated_conversation_history[-1] = {"role": "assistant", "content": final_response}

# For tutorial purposes, show validation results and conversation history

if verbosity >= 2:

print("Conversation History:")

for message in rag_response["conversation_history"]:

print(f" {message['role'].capitalize()}: {message['content']}")

print()

if verbosity >= 1:

print("Validation Results:")

for key, value in results.model_dump().items():

print(f" {key}: {value}")

print()

return updated_conversation_history

Let’s validate the RAG response to our first example conversation. Notice the conversational opening statement and the LLM response to it was not escalated to the SME or guardrailed and the “Final Response” stayed the same as the “Initial RAG response”.

conversation_history = []

query = "Can I ask a question about the Simple Water Bottle?"

conversation_history = run_validation(query, conversation_history, project, verbosity=1)

Lets ask a followup question and further break down the returned keys below.

query = "How much does the Simple Water Bottle cost?"

conversation_history = run_validation(query, conversation_history, project, verbosity=2)

The Project.validate() method returns a comprehensive dictionary containing multiple evaluation metrics and remediation options. Let’s examine the key components of these results:

Core Validation Results

-

expert_answer(String | None)- Contains the remediation response retrieved from the Codex Project.

- Returns

Nonein one scenario:- When no suitable expert answer exists in the Cleanlab Project for similar queries.

- Returns a string containing the expert-provided answer when:

- A semantically similar query exists in the Cleanlab Project with an expert answer.

-

escalated_to_sme(Boolean)- Will be

Trueif any eval fails withshould_escalate=True, meaning the score for that specific eval falls below a configured threshold.

- Will be

-

should_guardrail(Boolean)- Will be

Truewhen any configured guardrails are triggered withshould_guardrail=True, meaning the score for that specific guardrail falls below a configured threshold. - Does not trigger checking Cleanlab for an expert answer and flagging the query for review.

- Will be

Evaluation Metrics

Each evaluation metric has a triggered_guardrail and triggered_escalation boolean flag that indicates whether the metric’s score falls below its configured threshold, which determines if a response needs remediation or guardrailing.

By default, the Project.validate() method uses the following metrics as Evaluations for escalation:

trustworthiness: overall confidence that your RAG system’s response is correct.response_helpfulness: evaluates whether the response attempts to helpfully address the user query vs. abstaining or saying ‘I don’t know’.

By default, the Project.validate() method uses the following metrics as Guardrails:

trustworthiness: overall confidence that your RAG system’s response is correct (used for guardrailing and escalation).

You can modify these metrics or add your own by defining a custom list of Evaluations and/or Guardrails for a Project in the Codex Web App.

Validating an unhelpful response

Let’s continue our conversation and validate another example from our RAG system.

The response is flagged as bad, but no expert answer is available in the Cleanlab Project. The corresponding query will be logged there for SMEs to answer.

query = "Can I bulk order the bottles?"

conversation_history = run_validation(query, conversation_history, project, verbosity=2)

The RAG system is unable to answer this question because there is no relevant information in the retrieved context, nor has a similar question been answered in the Cleanlab Project (see the contents of the Cleanlab Project above).

Cleanlab automatically recognizes this question could not be answered and logs it into the Project where it awaits an answer from a SME (notice that escalated_to_sme is True).

Navigate to your Cleanlab Project in the Web App where you (or a SME at your company) can enter the desired answer for this query.

As soon as an answer is provided in Cleanlab, our RAG system will be able to answer all similar questions going forward (as seen for the previous query).

Validating an incorrect response where the expert answer is in Cleanlab

Let’s restart our conversation and validate another example from our RAG system. For this example, initial RAG response is a hallucination as the volume of the water bottle is not in the Retrieved context. It is incorrect.

Cleanlab auto-flags this response as bad (escalated to SME), and searches for an expert answer in the Cleanlab Project. Since one is available for a similar query in the Cleanlab Project, this expert answer is returned to the user.

conversation_history = []

query = "Can I ask a question about the Simple Water Bottle?"

conversation_history = run_validation(query, conversation_history, project, verbosity=0)

query = "How much water can it hold?"

conversation_history = run_validation(query, conversation_history, project, verbosity=1)

Updating assistant responses with expert answers

In a conversational setting, we want to update the conversation_history with the final_response provided by Cleanlab instead of the initial RAG response to track only the highest quality conversation.

Notice how the (incorrect) initial RAG response “The Simple Water Bottle can hold 16 oz of Water” is replaced with the expert answer from Cleanlab of “32oz”.

Advanced Usage

You can configure many aspects of the bad response detection like what score thresholds to use inside the Cleanlab Web App.

Response Quality Thresholds

Thresholds determine when a response needs intervention:

-

A metric (trustworthiness, pii_detection, etc.) can be defined as an Evaluation, Guardrail , or both.

-

Each metric has its own threshold (0-1) and a defined threshold direction (below/above).

-

If any Evaluation’s score violates the threshold condition, the response is escalated to an SME and an expert answer is attempted to be returned.

-

If any Guardrail’s score violates the threshold condition,

Project.validate()returns with theshould_guardrail=Trueflag and no other action is taken. -

Example: With Evaluation metric Hallucination (trustworthiness) threshold set to “below 0.85”

- Score 0.80 -> Escalated to an SME

- Score 0.90 -> Passes validation

Setting thresholds affects your validation strategy:

- Higher thresholds (e.g. 0.9) = Stricter validation

- More responses marked as “should_escalate”

- More queries logged for SMEs to answer

- Better response quality but higher SME workload

- Lower thresholds (e.g. 0.7) = More lenient validation

- Fewer responses marked as “should_escalate”

- Fewer queries logged for SMEs to answer

- Lower SME workload, but may allow lower quality responses from your RAG app to be returned unremediated.

Configure Custom Evaluations and Guardrails

You can configure these directly in the Cleanlab AI Platform Web UI. For details, see the Adding custom guardrails section in our other tutorial.

Rewritten Queries

If your Conversational RAG pipeline already includes a query rewriter, you can save compute by passing the rewritten query directly to the Project.validate() method. A rewritten_query should be a reformulation of the original query made self-contained with respect to multi-turn conversations to improve retrieval quality.

Simply provide it as the rewritten_query argument like so:

# After discussing France in previous conversation turns...

# Current query lacks context from the conversation

query = "What is its capital?"

# Rewritten query adds necessary context for better retrieval

rewritten_query = "What is the capital of France?"

results = project.validate(

messages=messages,

response=response,

query=query,

context=context,

rewritten_query=rewritten_query

)

Logging Additional Information

When project.validate() returns results indicating a response should be escalated to an SME, it logs the query into your Cleanlab Project. By default, this log automatically includes the evaluation scores (like trustworthiness), the context and LLM response.

You can include additional information that would be helpful for Subject Matter Experts (SMEs) when they review the logged queries in the Cleanlab Project later.

To add any extra information, simply pass in any key-value pairs into the metadata parameter in the validate() method. For example, you can add the location the Query came from like so:

metadata = {"location": "USA"}

results = project.validate(

messages=messages,

response=response,

query=query,

context=context,

metadata=metadata,

)

Next Steps

Now that Cleanlab is integrated with your Multi-turn Chat App, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

This tutorial demonstrated how to use Cleanlab to automatically detect and remediate bad responses in any RAG application. Cleanlab provides a robust way to evaluate response quality and automatically fetch expert answers when needed. For responses that don’t meet quality thresholds, Cleanlab automatically logs the queries for SME review.

Note: Automatic detection and remediation of bad responses for a Single-turn Q&A app is covered in the Detect and Remediate bad responses in Single-turn Q&A App Tutorial

Adding Cleanlab only improves your RAG app. Once integrated, it automatically identifies problematic responses and either remediates them with expert answers or logs them for review. Using a simple web interface, SMEs at your company can answer the highest priority questions in the Cleanlab Project. As soon as an answer is entered in Cleanlab, your RAG app will be able to properly handle all similar questions encountered in the future.

The Cleanlab AI Platform is the fastest way for nontechnical SMEs to directly improve your RAG system. As the Developer, you simply integrate Cleanlab once, and from then on, SMEs can continuously improve how your system handles common user queries without needing your help.

Need help, more capabilities, or other deployment options?

Check the FAQ or email us at: support@cleanlab.ai