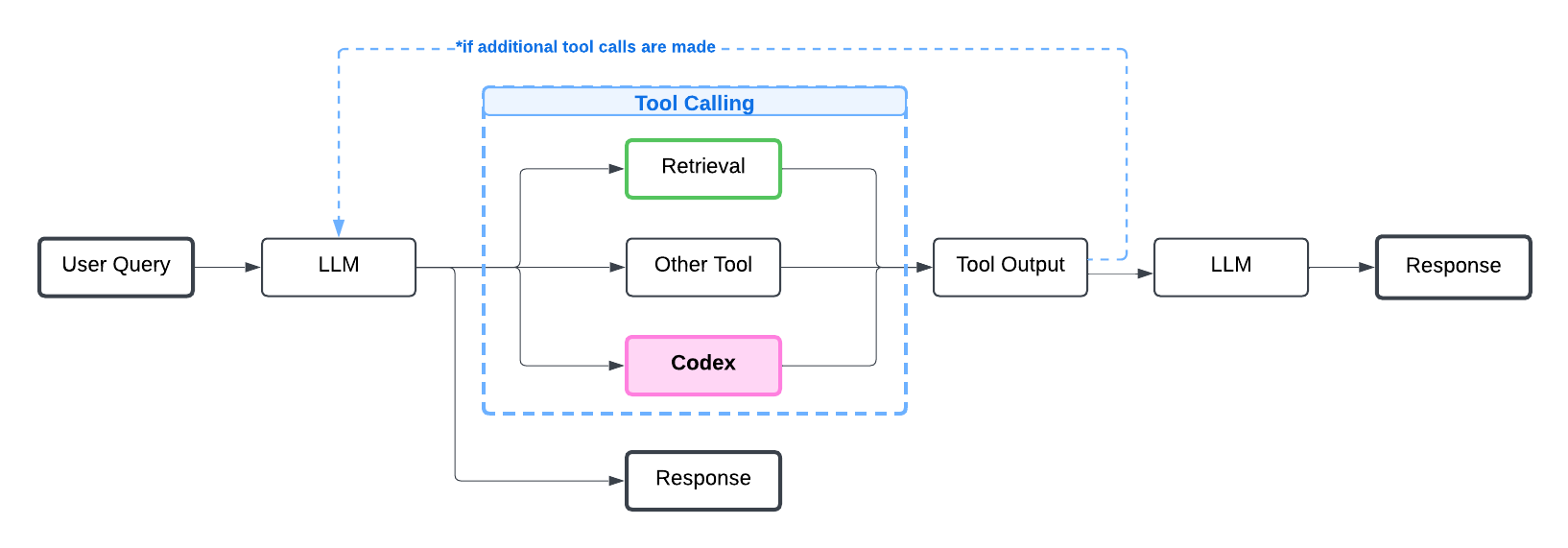

Integrate Codex-as-a-tool into OpenAI Assistants

This notebook assumes you have an OpenAI Assistants application that can handle tool calls. Learn how to build such an app via our tutorial: Agentic RAG with OpenAI Assistants.

Once you have an OpenAI Assistant running, adding Codex as an additional tool takes minimal effort and enables the Assistant to answer questions that its Knowledge Base alone cannot.

If you prefer to implement Codex without adding tool calls to your Assistant, check out our tutorial: Integrate Codex as-a-Backup with OpenAI Assistants.

Let’s first install the packages required for this tutorial.

%pip install openai # we used package-version 1.59.7

%pip install --upgrade cleanlab_codex

Optional: Helper methods for an OpenAI Assistants app from prior tutorial (Agentic RAG with OpenAI Assistants)

from datetime import datetime

from openai import OpenAI

from io import BytesIO

import json

from openai.types.beta.threads import Run

from openai.types.beta.assistant import Assistant

from openai.types.beta.assistant_tool_param import AssistantToolParam

from openai.types.beta.thread import Thread

from openai.types.beta.threads.run import Run as RunObject

from openai.types.beta.threads.message_content import MessageContent

from openai.types.beta.threads.run_submit_tool_outputs_params import ToolOutput

import os

fallback_answer = "Based on the available information, I cannot provide a complete answer to this question."

system_prompt_without_codex = f"""For each question:

1. Start with file_search tool

2. If file_search results are incomplete/empty:

- Inform the user about insufficient file results

- Use get_todays_date for additional information if the answer to the question requires today's date

- Present get_todays_date findings without citations

Only use citations (【source】) for information found directly in files via file_search.

Do not abstain from answering without trying both tools. When you do, say: "{fallback_answer}", nothing else."""

def get_todays_date(date_format: str) -> str:
    """A tool that returns today's date in the date format requested."""
    datetime_str = datetime.now().strftime(date_format)
    return datetime_str

todays_date_tool_json = {
    "type": "function",
    "function": {
        "name": "get_todays_date",
        "description": "A tool that returns today's date in the date format requested. Options are: 'YYYY-MM-DD', 'DD', 'MM', 'YYYY'.",
        "parameters": {
            "type": "object",
            "properties": {
                "date_format": {
                    "type": "string",
                    "enum": ["%Y-%m-%d", "%d", "%m", "%Y"],
                    "default": "%Y-%m-%d",
                    "description": "The date format to return today's date in."
                }
            },
            "required": ["date_format"],
        }
    }
}

DEFAULT_FILE_SEARCH: AssistantToolParam = {"type": "file_search"}

def create_rag_assistant(client: OpenAI, instructions: str, tools: list[AssistantToolParam]) -> Assistant:
    """Create and configure a RAG-enabled assistant."""
    assert any(tool["type"] == "file_search" for tool in tools), "File search tool is required"
    return client.beta.assistants.create(
        name="RAG Assistant",
        instructions=instructions,
        model="gpt-4o-mini",
        tools=tools,
    )

def load_documents(client: OpenAI):
    # Create a vector store
    vector_store = client.beta.vector_stores.create(name="Simple Context")

    # This is a highly simplified way to provide document content
    # In a real application, you would likely:
    # - Read documents from files on disk
    # - Download documents from a database or cloud storage
    # - Process documents from various sources (PDFs, web pages, etc.)
    documents = {
        "simple_water_bottle.txt": "Simple Water Bottle - Amber (limited edition launched Jan 1st 2025)\n\nA water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish.\n\nPrice: $24.99 \nDimensions: 10 inches height x 4 inches width",
    }

    # Ready the files for upload to OpenAI
    file_objects = []
    for doc_name, doc_content in documents.items():
        # Create BytesIO object from document content
        file_object = BytesIO(doc_content.encode("utf-8"))
        file_object.name = doc_name
        file_objects.append(file_object)

    # Upload files to vector store
    client.beta.vector_stores.file_batches.upload_and_poll(
        vector_store_id=vector_store.id,
        files=file_objects
    )
    return vector_store

class ToolRegistry:
    """Registry for tool implementations"""

    def __init__(self):
        self._tools = {}

    def register_tool(self, tool_name: str, handler):
        """Register a tool handler function"""
        self._tools[tool_name] = handler

    def get_handler(self, tool_name: str):
        """Get the handler for a tool"""
        return self._tools.get(tool_name)

    def __contains__(self, tool_name: str) -> bool:
        """Allow using 'in' operator to check if tool exists"""
        return tool_name in self._tools

class RAGChat:
    def __init__(self, client: OpenAI, assistant_id: str, tool_registry: ToolRegistry):
        self.client = client
        self.assistant_id = assistant_id
        self.tool_registry = tool_registry
        # Create a thread for the conversation
        self.thread: Thread = self.client.beta.threads.create()

    def _handle_tool_calls(self, run: RunObject) -> list[ToolOutput]:
        """Handle tool calls from the assistant."""
        if not run.required_action or not run.required_action.submit_tool_outputs:
            return []

        tool_outputs: list[ToolOutput] = []
        for tool_call in run.required_action.submit_tool_outputs.tool_calls:
            function_name = tool_call.function.name
            function_args = json.loads(tool_call.function.arguments)

            if function_name in self.tool_registry:
                print(f"[internal log] Calling tool: {function_name} with args: {function_args}")
                handler = self.tool_registry.get_handler(function_name)
                if handler is None:
                    raise ValueError(f"No handler found for called tool: {function_name}")
                output = handler(**function_args)
            else:
                output = f"Unknown tool: {function_name}"

            tool_outputs.append({
                "tool_call_id": tool_call.id,
                "output": output
            })
        return tool_outputs

    def _get_message_text(self, content: MessageContent) -> str:
        """Extract text from message content."""
        if hasattr(content, 'text'):
            return content.text.value
        return "Error: Message content is not text"

    def chat(self, user_message: str) -> str:
        """Process a user message and return the assistant's response."""
        # Add the user message to the thread
        self.client.beta.threads.messages.create(
            thread_id=self.thread.id,
            role="user",
            content=user_message
        )

        # Create a run
        run: Run = self.client.beta.threads.runs.create(
            thread_id=self.thread.id,
            assistant_id=self.assistant_id
        )

        # Wait for run to complete and handle any tool calls
        while True:
            run = self.client.beta.threads.runs.retrieve(
                thread_id=self.thread.id,
                run_id=run.id
            )

            if run.status == "requires_action":
                # Handle tool calls
                tool_outputs = self._handle_tool_calls(run)
                # Submit tool outputs
                run = self.client.beta.threads.runs.submit_tool_outputs(
                    thread_id=self.thread.id,
                    run_id=run.id,
                    tool_outputs=tool_outputs
                )
            elif run.status == "completed":
                # Get the latest message
                messages = self.client.beta.threads.messages.list(
                    thread_id=self.thread.id
                )
                if messages.data:
                    return self._get_message_text(messages.data[0].content[0])
                return "Error: No messages found"
            # Stop on every terminal non-success status so the loop cannot spin forever
            elif run.status in ["failed", "expired", "cancelled", "incomplete"]:
                return f"Error: Run {run.status}"

def add_vector_store_to_assistant(client: OpenAI, assistant, vector_store):
    assistant = client.beta.assistants.update(
        assistant_id=assistant.id,
        tool_resources={"file_search": {"vector_store_ids": [vector_store.id]}},
    )
    return assistant

Example RAG App: Product Customer Support

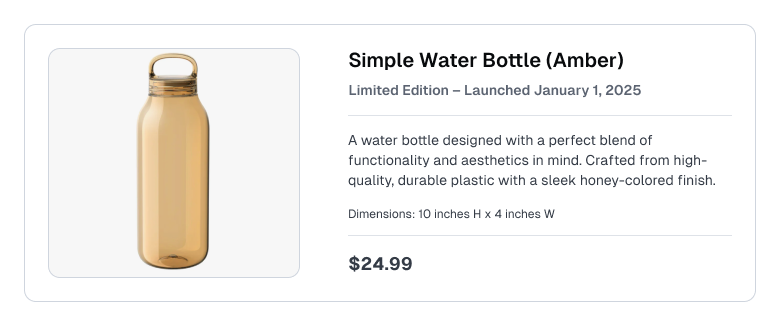

Let’s revisit our OpenAI Assistants application built in the tutorial: Agentic RAG with OpenAI Assistants, which has the option to call a get_todays_date() tool. This example represents a customer support / e-commerce use-case where the Knowledge Base contains product listings like the following:

For simplicity, our Assistant’s Knowledge Base here only contains a single document featuring this one product description.

Let’s initialize our OpenAI client, and then integrate Codex to improve this Assistant’s responses.

from openai import OpenAI

import os

os.environ["OPENAI_API_KEY"] = "<YOUR-KEY-HERE>" # Replace with your OpenAI API key

model = "gpt-4o" # model used by RAG system

client = OpenAI() # API key is read from the OPENAI_API_KEY environment variable

Create Codex Project

To use Codex, first create a Project.

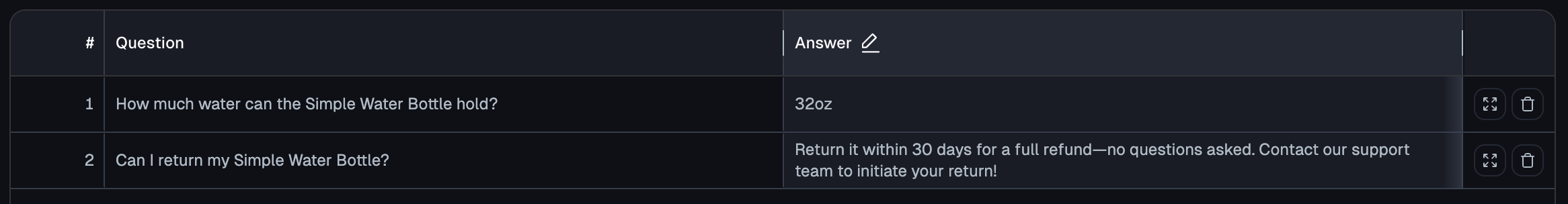

Here we assume some common (question, answer) pairs about the Simple Water Bottle have already been added to a Codex Project. Learn how that was done via our tutorial: Populating Codex.

Our existing Codex Project contains the following entries:

access_key = "<YOUR-PROJECT-ACCESS-KEY>" # Obtain from your Project's settings page: https://codex.cleanlab.ai/

Integrate Codex as an additional tool

You only need to make minimal changes to your code to include Codex as an additional tool:

- Add Codex to the list of tools provided to the Assistant.

- Update your system prompt to include instructions for calling Codex, as demonstrated below in system_prompt_with_codex.

After that, call your original Assistant with these updated variables to start experiencing the benefits of Codex!

from cleanlab_codex import CodexTool

codex_tool = CodexTool.from_access_key(access_key=access_key, fallback_answer=fallback_answer)

codex_tool_openai = codex_tool.to_openai_tool()

# Add Codex to the list of available tools for our RAGChat class

tool_registry = ToolRegistry()

tool_registry.register_tool(codex_tool.tool_name, codex_tool.query)

tool_registry.register_tool('get_todays_date', get_todays_date) # register other tools here

# Update the RAG system prompt with instructions for handling Codex (adjust based on your needs)

system_prompt_with_codex = f"""For each question:

1. Start with file_search tool

2. If file_search results are incomplete/empty:

- Inform the user about insufficient file results

- Then use {codex_tool.tool_name} for additional information

- Present {codex_tool.tool_name} findings without citations

- Use get_todays_date for additional information if the answer to the question requires today's date

- Present get_todays_date findings without citations

Only use citations (【source】) for information found directly in files via file_search.

Do not abstain from answering without trying all tools. When you do, say: "{fallback_answer}", nothing else."""

# Create Assistant that is integrated with Codex

vector_store = load_documents(client)

assistant = create_rag_assistant(client, system_prompt_with_codex, [DEFAULT_FILE_SEARCH, todays_date_tool_json, codex_tool_openai])

assistant = add_vector_store_to_assistant(client, assistant, vector_store)

rag_with_codex = RAGChat(client, assistant.id, tool_registry)

Optional: Create another version of the Assistant without Codex (rag_without_codex)

vector_store = load_documents(client)

tool_registry = ToolRegistry()

tool_registry.register_tool('get_todays_date', get_todays_date)

assistant = create_rag_assistant(client, system_prompt_without_codex, [DEFAULT_FILE_SEARCH, todays_date_tool_json])

assistant = add_vector_store_to_assistant(client, assistant, vector_store)

rag_without_codex = RAGChat(client, assistant.id, tool_registry)

Note: This tutorial uses a Codex tool description provided in OpenAI format via the to_openai_tool() function. You can instead manually write the Codex tool description yourself or import it in alternate provided formats.
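As a rough illustration of writing the description by hand, the sketch below builds a function-tool dictionary in the same shape as `todays_date_tool_json`. The tool name `consult_codex`, the `question` parameter, and the description text are assumptions made for this example; compare against `codex_tool.tool_name` and the output of `to_openai_tool()` for the actual values before relying on them.

```python
import json

# Assumed values for illustration only: the real tool name, description, and
# parameter names come from CodexTool and may differ from what is shown here.
manual_codex_tool = {
    "type": "function",
    "function": {
        "name": "consult_codex",
        "description": "Look up an answer in a question-answer database when file_search results are insufficient.",
        "parameters": {
            "type": "object",
            "properties": {
                "question": {
                    "type": "string",
                    "description": "The question to look up.",
                }
            },
            "required": ["question"],
        },
    },
}

print(json.dumps(manual_codex_tool, indent=2))
```

If you go this route, the name you register in `ToolRegistry` has to match the `name` field, since that is the key `_handle_tool_calls` uses to look up the handler.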

In Agentic RAG systems like OpenAI Assistants, retrieval is treated as yet another tool call (called file_search for OpenAI Assistants). Our system prompt should carefully instruct how/when the AI should use the Codex tool vs. other available tools. For OpenAI Assistants, we recommend instructing the AI to only consider the Codex tool after it has used file_search and is still unsure how to answer.

RAG with Codex in action

Integrating Codex as-a-Tool allows your RAG app to answer more questions than it was originally capable of.

Example 1

Let’s ask a question to our original RAG app (before Codex was integrated).

user_question = "Can I return my simple water bottle?"

rag_without_codex.chat(user_question)

The original RAG app is unable to answer, in this case because the required information is not in its Knowledge Base.

Let’s ask the same question to our RAG app with Codex added as an additional tool. Note that we use the updated system prompt and tool list when Codex is integrated in the RAG app.

rag_with_codex.chat(user_question)

As you can see, integrating Codex enables your RAG app to answer questions it originally struggled with, as long as a similar question was already answered in the corresponding Codex Project.

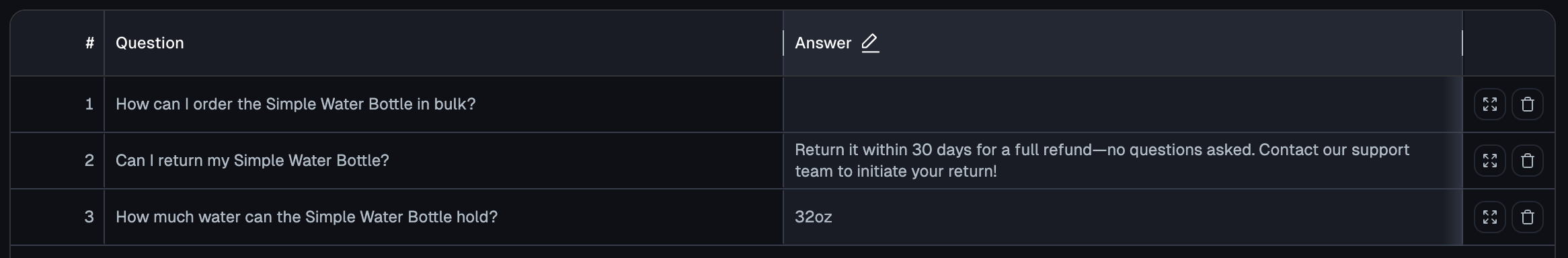

Example 2

Let’s ask another question to our RAG app with Codex integrated.

user_question = "How can I order the Simple Water Bottle in bulk?"

rag_with_codex.chat(user_question)

Our RAG app is unable to answer this question because there is no relevant information in its Knowledge Base, nor has a similar question been answered in the Codex Project (see the contents of the Codex Project above).

Codex automatically recognizes that this question could not be answered and logs it into the Project, where it awaits an answer from a subject matter expert (SME). Navigate to your Codex Project in the Web App, where you (or an SME at your company) can enter the desired answer for this query.

As soon as an answer is provided in Codex, our RAG app will be able to answer all similar questions going forward (as seen for the previous query).
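Since the system prompt tells the Assistant to reply with the fallback answer and nothing else when it cannot help, an application could check for that string to spot unanswered questions. The `is_fallback` helper below is a hypothetical sketch, not part of cleanlab_codex or the OpenAI SDK, and it assumes the Assistant follows the prompt closely enough for a normalized string comparison to work.

```python
fallback_answer = "Based on the available information, I cannot provide a complete answer to this question."


def _normalize(text: str) -> str:
    """Lowercase the text and drop outer whitespace and double quotes."""
    return text.strip().strip('"').strip().lower()


def is_fallback(response: str, fallback: str = fallback_answer) -> bool:
    """Return True if the response is just the fallback answer after normalization."""
    return _normalize(response) == _normalize(fallback)


# A quoted, padded copy of the fallback answer versus a real answer
quoted_fallback = f'  "{fallback_answer}" '
real_answer = "The bottle is 10 inches tall and 4 inches wide."
print(is_fallback(quoted_fallback))
print(is_fallback(real_answer))
```

A check like this could be applied to the string returned by `rag_with_codex.chat(user_question)`, for example to count how often users hit questions that still need an SME answer.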

Example 3

Let’s ask another query to our RAG app with Codex integrated. This is a query the original RAG app was able to correctly answer without Codex (since the relevant information exists in the Knowledge Base).

user_question = "How big is the water bottle?"

rag_with_codex.chat(user_question)

We see that the RAG app with Codex integrated is still able to correctly answer this query. Integrating Codex has no negative effect on questions your original RAG app could answer.

Next Steps

Now that Codex is integrated with your RAG app, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

Adding Codex only improves your AI Assistant. Once integrated, Codex automatically logs all user queries that your original AI Assistant handles poorly. Using a simple web interface, SMEs at your company can answer the highest priority questions in the Codex Project. As soon as an answer is entered in Codex, your AI Assistant will be able to properly handle all similar questions encountered in the future.

Codex is the fastest way for nontechnical SMEs to directly improve your AI application. As the Developer, you simply integrate Codex once, and from then on, SMEs can continuously improve how your AI handles common user queries without needing your help.

Need help, more capabilities, or other deployment options? Check the FAQ or email us at: support@cleanlab.ai