Integrate Codex as-a-Tool with LangChain

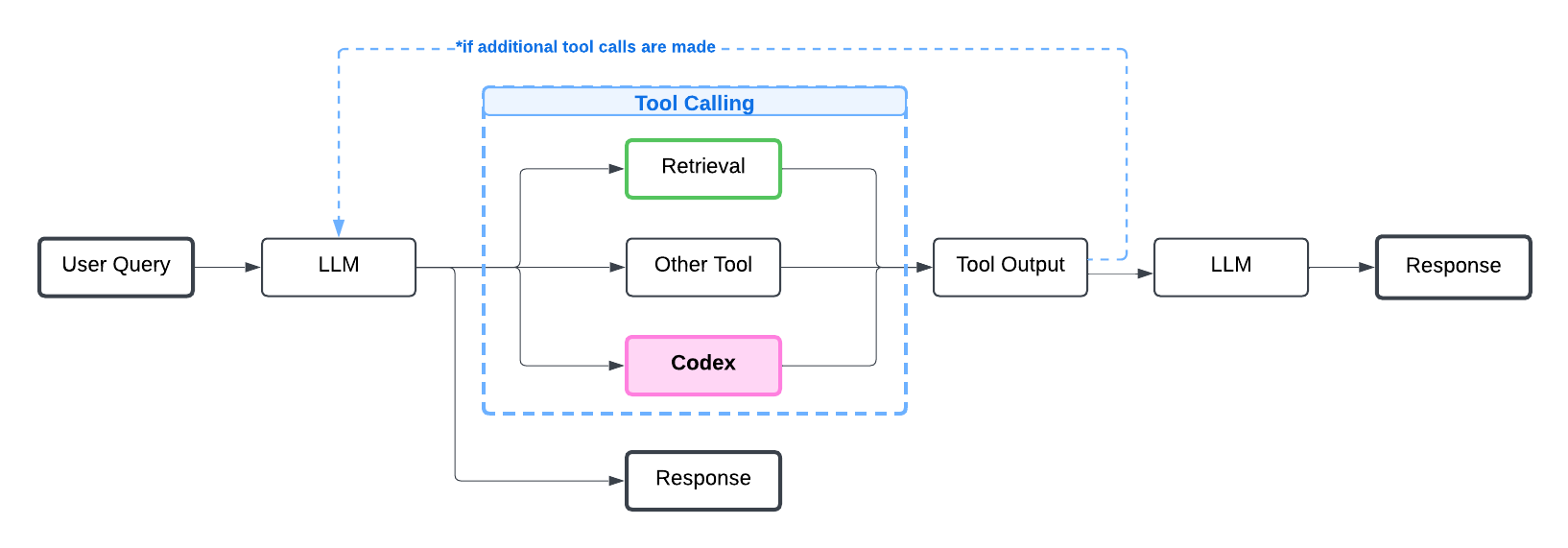

We demonstrate how to integrate Codex with a conversational agentic RAG application built with LangChain.

This tutorial presumes your RAG app already supports tool calls. Learn how to add tool calls to any LangChain application via our tutorial: RAG with Tool Calls in LangChain.

Once you have a RAG app that supports tool calling, adding Codex as an additional Tool takes minimal effort and can improve the responses from your AI application.

If you want to integrate Codex without adding tool calls to your application, check out our other integrations.

Let’s first install packages required for this tutorial.

%pip install langchain-text-splitters langchain-community langgraph langchain-openai # we used package-versions 0.3.5, 0.3.16, 1.59.7, 0.3.2

%pip install --upgrade cleanlab-codex

import os

os.environ["OPENAI_API_KEY"] = "<YOUR-KEY-HERE>" # Replace with your OpenAI API key

Optional: Helper methods for basic RAG from prior tutorial (Adding Tool Calls to RAG)

from langchain_core.tools import tool

from datetime import datetime

@tool
def get_todays_date(date_format: str) -> str:
    "A tool that returns today's date in the date format requested. Options for date_format parameter are: '%Y-%m-%d', '%d', '%m', '%Y'."
    datetime_str = datetime.now().strftime(date_format)
    return datetime_str

@tool
def retrieve(query: str) -> str:
    """Search through available documents to find relevant information."""
    docs = vector_store.similarity_search(query, k=2)
    return "\n\n".join(doc.page_content for doc in docs)
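As a quick illustration of the four format strings listed in the get_todays_date docstring, the sketch below formats one fixed date with each of them. The fixed date and the variable names are chosen here only so the output is reproducible; the tool itself uses the current date.

```python
from datetime import datetime

# Fixed date for a reproducible illustration (the real tool calls datetime.now()).
sample_date = datetime(2025, 1, 1)

# The four format strings named in the tool's docstring.
docstring_formats = ("%Y-%m-%d", "%d", "%m", "%Y")
formatted = {fmt: sample_date.strftime(fmt) for fmt in docstring_formats}

for fmt, value in formatted.items():
    print(f"{fmt} -> {value}")
```

Because the LLM chooses the date_format argument, listing the allowed formats in the docstring is what steers it toward a valid choice.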

embedding_model = "text-embedding-3-small" # any LangChain embeddings model

fallback_answer = "Based on the available information, I cannot provide a complete answer to this question."

system_message_without_codex = f"""You are a helpful assistant designed to help users navigate a complex set of documents for question-answering tasks. Answer the user's Question based on the following possibly relevant Context and previous chat history using the tools provided if necessary. Follow these rules in order:

1. NEVER use phrases like "according to the context", "as the context states", etc. Treat the Context as your own knowledge, not something you are referencing.

2. Use only information from the provided Context.

3. Give a clear, short, and accurate Answer. Explain complex terms if needed.

4. You have access to the retrieve tool, to retrieve relevant information to the query as Context.

5. If the answer to the question requires today's date, use the following tool: get_todays_date. Return the date in the exact format the tool provides it.

6. If the Context doesn't adequately address the Question or you are unsure how to answer the Question, say: "{fallback_answer}" only, nothing else.

Remember, your purpose is to provide information based on the Context, not to offer original advice.

"""

from langchain_openai import ChatOpenAI

from langchain_openai import OpenAIEmbeddings

from langchain_core.documents import Document

from langchain_core.vectorstores import InMemoryVectorStore

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain_core.tools import BaseTool

from langchain_core.messages import (
    HumanMessage,
    SystemMessage,
    BaseMessage,
)

from typing import List, Optional

# Initialize vector store

embeddings = OpenAIEmbeddings(model=embedding_model)

vector_store = InMemoryVectorStore(embeddings)

# Sample document to demonstrate Codex integration

product_page_content = """Simple Water Bottle - Amber (limited edition launched Jan 1st 2025)

A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish.

Price: $24.99 \nDimensions: 10 inches height x 4 inches width"""

documents = [
    Document(
        id="simple_water_bottle.txt",
        page_content=product_page_content,
    ),
]

# Standard LangChain text splitting - use any splitter that fits your docs

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

all_splits = text_splitter.split_documents(documents)

# Add documents to your chosen vector store

_ = vector_store.add_documents(documents=all_splits)
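To build intuition for what chunk_size and chunk_overlap control, here is a deliberately simplified sketch using fixed-size character windows. It is not the algorithm used by RecursiveCharacterTextSplitter, which prefers to split on separators such as paragraph breaks; the helper name sliding_window_chunks is hypothetical.

```python
def sliding_window_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


short_text = "x" * 300   # shorter than chunk_size: stays in one chunk
long_text = "x" * 2500   # longer than chunk_size: split into overlapping chunks

short_chunks = sliding_window_chunks(short_text, chunk_size=1000, chunk_overlap=200)
long_chunks = sliding_window_chunks(long_text, chunk_size=1000, chunk_overlap=200)

print(len(short_chunks))
print([len(chunk) for chunk in long_chunks])
```

The sample product page above is well under 1000 characters, so with these settings it is stored as a single chunk.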

class RAGApp:
    def __init__(
        self,
        llm: ChatOpenAI,
        tools: List[BaseTool],
        retriever: BaseTool,
        messages: Optional[List[BaseMessage]] = None
    ):
        """Initialize RAG application with provided components."""
        _tools = [retriever] + tools
        self.tools = {tool.name: tool for tool in _tools}
        self.llm = llm.bind_tools(_tools)
        self.messages: List[BaseMessage] = messages or []

    def chat(self, user_query: str) -> str:
        """Process user input and handle any necessary tool calls."""
        # Add user query to messages
        self.messages.append(HumanMessage(content=user_query))

        # Get initial response (may include tool calls)
        print(f"[internal log] Invoking LLM text\n{user_query}\n\n")
        response = self.llm.invoke(self.messages)
        self.messages.append(response)

        # Handle any tool calls
        while response.tool_calls:
            # Process each tool call
            for tool_call in response.tool_calls:
                # Get the appropriate tool
                tool = self.tools[tool_call["name"].lower()]

                # Call the tool and get result
                tool_name = tool_call["name"]
                tool_args = tool_call["args"]
                print(f"[internal log] Calling tool: {tool_name} with args: {tool_args}")
                tool_result = tool.invoke(tool_call)
                print(f'[internal log] Tool response: {str(tool_result)}')
                self.messages.append(tool_result)

            # Get next response after tool calls
            response = self.llm.invoke(self.messages)
            self.messages.append(response)

        return response.content
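The control flow of chat() can be easier to follow without any network calls. The sketch below mirrors the same loop (invoke the LLM, run each requested tool, invoke again until no tool calls remain) using a scripted stand-in for the model. FakeResponse, ScriptedLLM, run_tool_loop, and the canned responses are all hypothetical and exist only for this illustration; they are not part of LangChain or Codex.

```python
from dataclasses import dataclass, field


@dataclass
class FakeResponse:
    """Stand-in for an LLM message: text plus any tool calls it requests."""
    content: str = ""
    tool_calls: list = field(default_factory=list)


class ScriptedLLM:
    """Hypothetical stub that replays canned responses instead of calling a model."""

    def __init__(self, responses):
        self._responses = iter(responses)

    def invoke(self, messages):
        return next(self._responses)


def run_tool_loop(llm, tools, messages):
    """Invoke the LLM, run requested tools, and repeat until no tool calls remain."""
    response = llm.invoke(messages)
    messages.append(response)
    while response.tool_calls:
        for call in response.tool_calls:
            result = tools[call["name"]](**call["args"])
            messages.append({"role": "tool", "name": call["name"], "content": result})
        response = llm.invoke(messages)
        messages.append(response)
    return response.content


# Canned tool and responses, invented for this demo.
demo_tools = {"retrieve": lambda query: "Dimensions: 10 inches height x 4 inches width"}
scripted_llm = ScriptedLLM([
    FakeResponse(tool_calls=[{"name": "retrieve", "args": {"query": "bottle size"}}]),
    FakeResponse(content="The bottle is 10 inches tall and 4 inches wide."),
])
demo_history = [{"role": "user", "content": "How big is the water bottle?"}]

demo_answer = run_tool_loop(scripted_llm, demo_tools, demo_history)
print(demo_answer)
print(len(demo_history))  # user message, tool request, tool result, final answer
```

The loop ends only when the model returns a response with no tool calls, which is why every tool result is appended to the message history before the next invocation.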

Example: Customer Service for a New Product

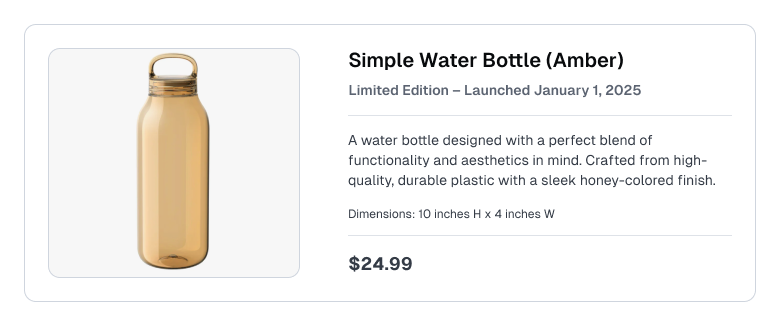

Let’s revisit our RAG app built in the RAG With Tool Calls in LangChain tutorial, which has the option to call a get_todays_date() tool. This example represents a customer support / e-commerce use-case where the Knowledge Base contains product listings like the following:

The details of this example RAG app are unimportant if you are already familiar with RAG and Tool Calling; otherwise, refer to the RAG With Tool Calls in LangChain tutorial, which walks through the RAG app defined above. Subsequently, we integrate Codex-as-a-Tool and demonstrate its benefits.

generation_model = "gpt-4o" # model used by RAG system (has to support tool calling)

embedding_model = "text-embedding-3-small" # any LangChain embeddings model

llm = ChatOpenAI(model=generation_model)

Create Codex Project

To use Codex, first create a Project in the Web App. That is covered in our tutorial: Getting Started with the Codex Web App.

Here we assume some common (question, answer) pairs about the Simple Water Bottle have already been added to a Codex Project. To learn how that was done, see our tutorial: Populating Codex.

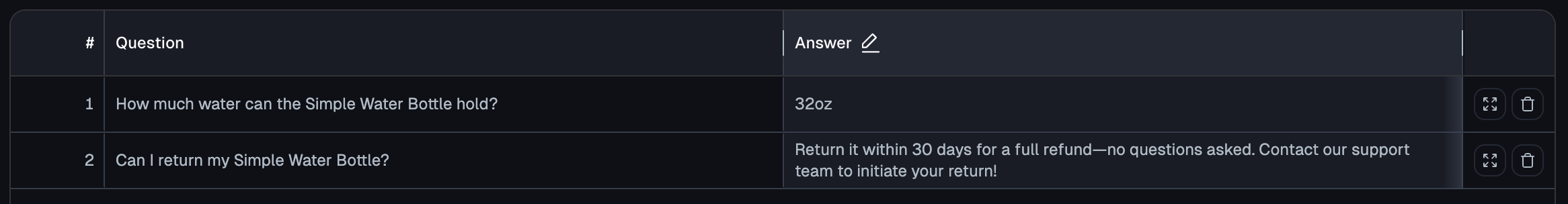

Our existing Codex Project contains the following entries:

access_key = "<YOUR-PROJECT-ACCESS-KEY>" # Obtain from your Project's settings page: https://codex.cleanlab.ai/

Integrate Codex as an additional tool

Integrating Codex into a RAG app that supports tool calling requires minimal code changes:

- Import Codex and add it to your list of tools.
- Update your system prompt to include instructions for calling Codex, as demonstrated below in system_message_with_codex.

After that, call your original RAG pipeline with these updated variables to start experiencing the benefits of Codex!

# 1: Import CodexTool

from cleanlab_codex import CodexTool

codex_tool = CodexTool.from_access_key(access_key=access_key, fallback_answer=fallback_answer)

codex_tool_langchain = codex_tool.to_langchain_tool()

globals()[codex_tool.tool_name] = codex_tool.query # Optional step for convenience: make function to call the tool globally accessible

# 2: Update the RAG system prompt with instructions for handling Codex (adjust based on your needs)

system_message_with_codex = f"""You are a helpful assistant designed to help users navigate a complex set of documents for question-answering tasks. Answer the user's Question based on the following possibly relevant Context and previous chat history using the tools provided if necessary. Follow these rules in order:

1. NEVER use phrases like "according to the context", "as the context states", etc. Treat the Context as your own knowledge, not something you are referencing.

2. Use only information from the provided Context.

3. Give a clear, short, and accurate Answer. Explain complex terms if needed.

4. You have access to the retrieve tool, to retrieve relevant information to the query as Context. Prioritize using this tool to answer the user's question.

5. If the answer to the question requires today's date, use the following tool: get_todays_date. Return the date in the exact format the tool provides it.

6. When the Context does not answer the user's Question, call the `{codex_tool.tool_name}` tool.

- Always use `{codex_tool.tool_name}` if the provided Context lacks the necessary information.

- Your query to `{codex_tool.tool_name}` should closely match the user’s original Question, with only minor clarifications if needed.

- Evaluate the response from `{codex_tool.tool_name}`. If the response is helpful, use it to answer the user’s Question. If the response is not helpful, ignore it.

7. If you still cannot confidently answer the user's Question (even after using `{codex_tool.tool_name}` and other tools), say: "{fallback_answer}".

Remember, your purpose is to provide information based on the Context and make effective use of `{codex_tool.tool_name}` when necessary, not to offer original advice.

"""

# 3: Initialize RAGApp with the CodexTool

llm = ChatOpenAI(model=generation_model)

rag_with_codex = RAGApp(
    llm=llm,
    tools=[get_todays_date, codex_tool_langchain], # Add Codex to list of tools
    retriever=retrieve,
    messages=[SystemMessage(content=system_message_with_codex)] # Add system message with Codex instructions
)
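Since the integration depends on both the tool list and the system prompt being updated, a small sanity check can catch a prompt that never mentions one of the registered tools. This is a minimal sketch: the helper missing_tool_mentions is hypothetical, and the tool name consult_codex is only an assumed placeholder for whatever codex_tool.tool_name returns.

```python
def missing_tool_mentions(system_message: str, tool_names: list[str]) -> list[str]:
    """Return the tool names that the system message never mentions."""
    return [name for name in tool_names if name not in system_message]


# Hypothetical stand-ins for the real prompt and tool names.
example_prompt = (
    "Use the retrieve tool first. "
    "If the Context lacks the answer, call `consult_codex`."
)
example_tool_names = ["retrieve", "get_todays_date", "consult_codex"]

unmentioned = missing_tool_mentions(example_prompt, example_tool_names)
print(unmentioned)
```

In this tutorial's setup, the same check could be run as missing_tool_mentions(system_message_with_codex, list(rag_with_codex.tools)), since RAGApp stores its tools in a dictionary keyed by tool name.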

Optional: Initialize RAG App without CodexTool

rag_without_codex = RAGApp(
    llm=llm,
    tools=[get_todays_date], # Add your tools here
    retriever=retrieve,
    messages=[SystemMessage(content=system_message_without_codex)]
)

RAG with Codex in action

Integrating Codex as-a-Tool allows your RAG app to answer more questions than it was originally capable of.

Example 1

Let’s ask a question to our original RAG app (before Codex was integrated).

response = rag_without_codex.chat("Can I return my Simple Water Bottle?")

print(f"\n[RAG response] {response}")

The original RAG app is unable to answer, in this case because the required information is not in its Knowledge Base.

Let’s ask the same question to our RAG app with Codex added as an additional tool. Note that we use the updated system prompt and tool list when Codex is integrated in the RAG app.

response = rag_with_codex.chat("Can I return my Simple Water Bottle?")

print(f"\n[RAG response] {response}")

As you can see, integrating Codex enables your RAG app to answer questions it originally struggled with, as long as a similar question was already answered in the corresponding Codex Project.

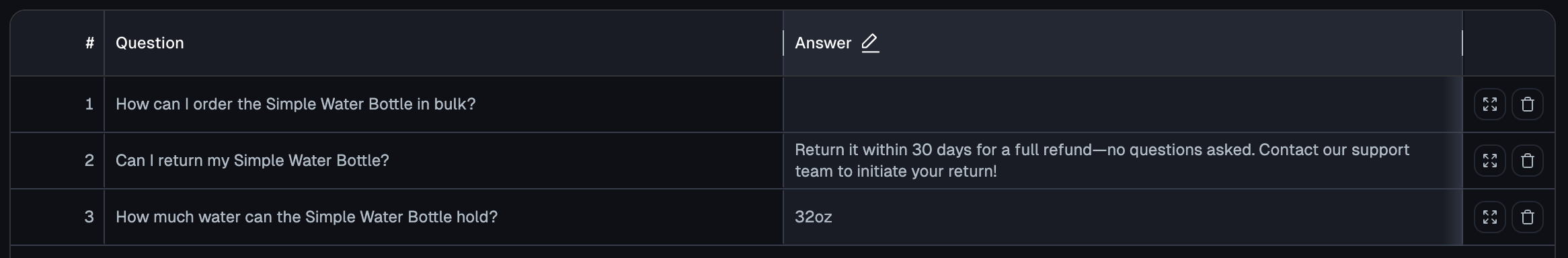

Example 2

Let’s ask another question to our RAG app with Codex integrated.

response = rag_with_codex.chat("How can I order the Simple Water Bottle in bulk?")

print(f"\n[RAG response] {response}")

Our RAG app is unable to answer this question because there is no relevant information in its Knowledge Base, nor has a similar question been answered in the Codex Project (see the contents of the Codex Project above).

Codex automatically recognizes that this question could not be answered and logs it into the Project, where it awaits an answer from a subject-matter expert (SME).

As soon as an answer is provided in Codex, our RAG app will be able to answer all similar questions going forward (as seen for the previous query).
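The log-then-answer cycle described above can be pictured with a toy in-memory store. This is only a conceptual sketch and not how Codex is implemented: the class ToyAnswerStore, the string-similarity matching via difflib, the 0.8 threshold, and the placeholder answer text are all assumptions made for illustration.

```python
from difflib import SequenceMatcher
from typing import Optional


class ToyAnswerStore:
    """Toy stand-in for a question/answer project; not how Codex is implemented."""

    def __init__(self, threshold: float = 0.8):
        self.answers: dict[str, str] = {}
        self.unanswered: list[str] = []
        self.threshold = threshold

    def add_answer(self, question: str, answer: str) -> None:
        """Record an SME's answer and clear the question from the backlog."""
        self.answers[question] = answer
        self.unanswered = [q for q in self.unanswered if q != question]

    def query(self, question: str) -> Optional[str]:
        """Return the answer to a similar known question, or log the question."""
        for known, answer in self.answers.items():
            score = SequenceMatcher(None, question.lower(), known.lower()).ratio()
            if score >= self.threshold:
                return answer
        if question not in self.unanswered:
            self.unanswered.append(question)
        return None


store = ToyAnswerStore()

# First attempt: nothing similar has been answered, so the question is logged.
first_try = store.query("How can I order the Simple Water Bottle in bulk?")
logged = list(store.unanswered)

# An SME answers the logged question (placeholder text, not a real answer).
store.add_answer("How can I order the Simple Water Bottle in bulk?", "<answer entered by an SME>")

# A similarly worded question is now answered.
second_try = store.query("How can I order Simple Water Bottles in bulk?")
print(first_try, logged, second_try)
```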

Example 3

Let’s ask another query to our RAG app with Codex integrated. This is a query the original RAG app was able to correctly answer without Codex (since the relevant information exists in the Knowledge Base).

response = rag_with_codex.chat("How big is the water bottle?")

print(f"\n[RAG response] {response}")

We see that the RAG app with Codex integrated still answers this query correctly. In this example, integrating Codex does not change the answers to questions your original RAG app could already handle.

Next Steps

Now that Codex is integrated with your RAG app, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

Adding Codex only improves your RAG app. Once integrated, Codex automatically logs all user queries that your original RAG app handles poorly. Using a simple web interface, SMEs at your company can answer the highest priority questions in the Codex Project. As soon as an answer is entered in Codex, your RAG app will be able to properly handle all similar questions encountered in the future.

Codex is the fastest way for nontechnical SMEs to directly improve your AI application. As the Developer, you simply integrate Codex once, and from then on, SMEs can continuously improve how your AI handles common user queries without needing your help.

Need help, more capabilities, or other deployment options? Check the FAQ or email us at: support@cleanlab.ai

Optional: View message history for deeper understanding

The full conversation history, including tool calls, can help you understand the internal steps. You can inspect it as follows:

rag_with_codex.messages
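The raw message list can be verbose, so a compact summary may be easier to scan. The helper below is a hypothetical sketch: it relies only on the type, content, and tool_calls attributes that LangChain message objects expose, and it is demonstrated here on simple stand-in objects rather than on real messages.

```python
from types import SimpleNamespace


def summarize_messages(messages) -> list[str]:
    """Return one short line per message: its type, requested tools, and a content preview."""
    lines = []
    for index, message in enumerate(messages):
        kind = getattr(message, "type", type(message).__name__)
        tool_calls = getattr(message, "tool_calls", None) or []
        requested = ", ".join(call["name"] for call in tool_calls)
        preview = str(getattr(message, "content", ""))[:60].replace("\n", " ")
        suffix = f" [calls: {requested}]" if requested else ""
        lines.append(f"{index}: {kind}{suffix} {preview}".rstrip())
    return lines


# Stand-in objects shaped like chat messages, invented for this demo.
demo_messages = [
    SimpleNamespace(type="human", content="How big is the water bottle?"),
    SimpleNamespace(type="ai", content="", tool_calls=[{"name": "retrieve", "args": {}}]),
    SimpleNamespace(type="tool", content="Dimensions: 10 inches height x 4 inches width"),
]

summary = summarize_messages(demo_messages)
print("\n".join(summary))
```

In the tutorial's setup, the same helper could be called as summarize_messages(rag_with_codex.messages).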