Integrate Codex as-a-Backup with AWS Bedrock Knowledge Bases

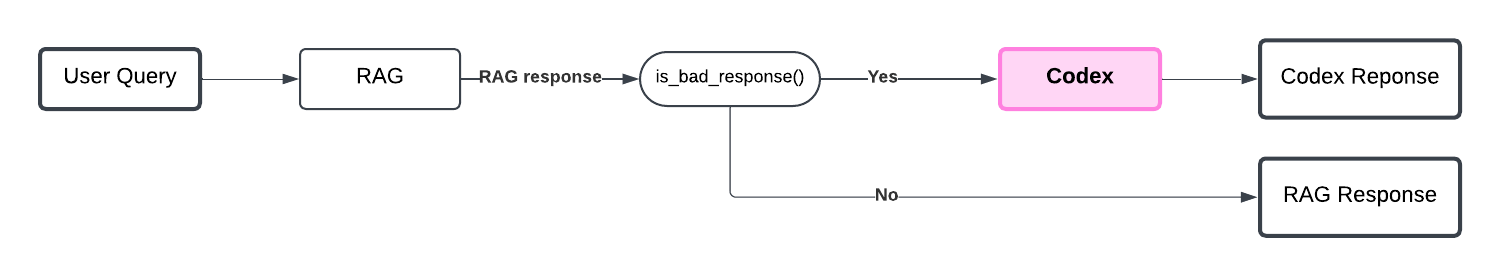

This notebook demonstrates how to integrate Codex as a backup to an existing RAG application built with the AWS Bedrock Knowledge Bases and Converse APIs.

This is our recommended integration strategy for developers using AWS Knowledge Bases. The integration takes only a few lines of code and offers more control than integrating Codex as-a-Tool.

Let’s first install packages required for this tutorial.

```python
# Install necessary AWS and Cleanlab packages
%pip install -U boto3 cleanlab-codex cleanlab-tlm  # we used boto3 version 1.36.0
```

Example RAG App: Customer Service for a New Product

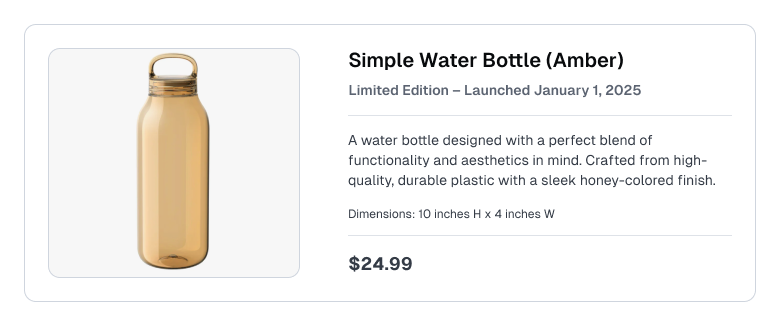

Consider a customer support use-case, where the RAG application is built on a Knowledge Base with product pages such as the following:

RAG with AWS Knowledge Bases

Let’s set up our Assistant! To keep this example simple, our Assistant’s Knowledge Base only has a single document containing the description of the product listed above.

Optional: Helper functions for AWS Knowledge Bases

```python
import os

import boto3
from botocore.client import Config

os.environ["AWS_ACCESS_KEY_ID"] = "<YOUR_AWS_ACCESS_KEY_ID>"  # Your permanent access key (not session access key)
os.environ["AWS_SECRET_ACCESS_KEY"] = "<YOUR_AWS_SECRET_ACCESS_KEY>"  # Your permanent secret access key (not session secret access key)
os.environ["MFA_DEVICE_ARN"] = "<YOUR_MFA_DEVICE_ARN>"  # If your organization requires MFA, find this in the AWS Console under: settings -> security credentials -> your MFA device
os.environ["AWS_REGION"] = "us-east-1"  # Specify your AWS region

# Load environment variables
aws_access_key_id = os.getenv("AWS_ACCESS_KEY_ID")
aws_secret_access_key = os.getenv("AWS_SECRET_ACCESS_KEY")
region_name = os.getenv("AWS_REGION", "us-east-1")  # Default to 'us-east-1' if not set
mfa_serial_number = os.getenv("MFA_DEVICE_ARN")

# Ensure required environment variables are set
if not all([aws_access_key_id, aws_secret_access_key, mfa_serial_number]):
    raise EnvironmentError(
        "Missing required environment variables. Ensure AWS_ACCESS_KEY_ID, "
        "AWS_SECRET_ACCESS_KEY, and MFA_DEVICE_ARN are set."
    )

# Prompt user for MFA code
mfa_token_code = input("Enter your MFA code: ")

# Create an STS client using your permanent credentials
sts_client = boto3.client(
    "sts",
    aws_access_key_id=aws_access_key_id,
    aws_secret_access_key=aws_secret_access_key,
    region_name=region_name,
)

try:
    # Request temporary credentials
    response = sts_client.get_session_token(
        DurationSeconds=3600 * 24,  # Valid for 24 hours
        SerialNumber=mfa_serial_number,
        TokenCode=mfa_token_code,
    )

    # Extract temporary credentials
    temp_credentials = response["Credentials"]
    temp_access_key = temp_credentials["AccessKeyId"]
    temp_secret_key = temp_credentials["SecretAccessKey"]
    temp_session_token = temp_credentials["SessionToken"]

    # Create the Bedrock Agent Runtime client
    client = boto3.client(
        "bedrock-agent-runtime",
        aws_access_key_id=temp_access_key,
        aws_secret_access_key=temp_secret_key,
        aws_session_token=temp_session_token,
        region_name=region_name,
    )
    print("Bedrock client successfully created.")
except Exception as e:
    print(f"Error creating Bedrock client: {e}")
    raise  # Later cells need the temporary credentials, so stop here on failure
```
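The temporary credentials from get_session_token expire (here after 24 hours), and the returned Credentials dict also carries an Expiration timestamp. A minimal sketch, assuming you keep that timezone-aware Expiration value around, of a helper that tells you when to rerun the cell above; the needs_refresh name and the 10-minute safety margin are hypothetical choices, not part of boto3.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def needs_refresh(
    expiration: datetime,
    now: Optional[datetime] = None,
    margin: timedelta = timedelta(minutes=10),
) -> bool:
    """Return True if temporary credentials expire within `margin` of `now`.

    `expiration` is assumed to be timezone-aware, like the
    response["Credentials"]["Expiration"] value returned by boto3.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return expiration - now <= margin


# Example: credentials issued for 24 hours
issued = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
expiration = issued + timedelta(hours=24)
print(needs_refresh(expiration, now=issued + timedelta(hours=1)))  # False
print(needs_refresh(expiration, now=issued + timedelta(hours=23, minutes=55)))  # True
```

In the notebook you could call needs_refresh(temp_credentials["Expiration"]) before a long session and regenerate the credentials if it returns True.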

Optional: Define helper methods for RAG retrieval, generation, and client creation (following the AWS Knowledge Bases format)

```python
from typing import Optional

SCORE_THRESHOLD = 0.3  # Similarity score threshold for retrieving context to use in our RAG app


def retrieve(query, knowledgebase_id, numberOfResults=3):
    """Query the Knowledge Base using hybrid (semantic + keyword) search."""
    return BEDROCK_RETRIEVE_CLIENT.retrieve(
        retrievalQuery={"text": query},
        knowledgeBaseId=knowledgebase_id,
        retrievalConfiguration={
            "vectorSearchConfiguration": {
                "numberOfResults": numberOfResults,
                "overrideSearchType": "HYBRID",
            }
        },
    )


def retrieve_and_get_contexts(query, kbId, numberOfResults=3, threshold=0.0):
    """Return the text of retrieved chunks whose score is at least `threshold`."""
    retrieval_results = retrieve(query, kbId, numberOfResults)
    contexts = []
    for retrievedResult in retrieval_results["retrievalResults"]:
        if retrievedResult["score"] >= threshold:
            text = retrievedResult["content"]["text"]
            if text.startswith("Document 1: "):
                text = text[len("Document 1: "):]  # Remove prefix if present
            contexts.append(text)
    return contexts


def generate_text(
    messages: list[dict],
    model_id: str,
    system_messages: Optional[list[dict]] = None,
    bedrock_client=None,
) -> list[dict]:
    """Generates a response with the Amazon Bedrock Converse API.

    Params:
        messages: List of message history in the Converse API format.
        model_id: Identifier for the Amazon Bedrock model.
        system_messages: List of system prompt blocks, e.g. [{"text": "..."}].
        bedrock_client: Client to interact with the Bedrock API.

    Returns:
        messages: The message list with the model's response appended.
    """
    response = bedrock_client.converse(
        modelId=model_id,
        messages=messages,
        system=system_messages or [],
    )
    output_message = response["output"]["message"]
    messages.append(output_message)
    return messages


bedrock_config = Config(
    connect_timeout=120, read_timeout=120, retries={"max_attempts": 0}
)

# Client for Knowledge Base retrieval
BEDROCK_RETRIEVE_CLIENT = boto3.client(
    "bedrock-agent-runtime",
    config=bedrock_config,
    aws_access_key_id=temp_access_key,
    aws_secret_access_key=temp_secret_key,
    aws_session_token=temp_session_token,
    region_name=region_name,
)

# Client for LLM generation via the Converse API
BEDROCK_GENERATION_CLIENT = boto3.client(
    service_name="bedrock-runtime",
    aws_access_key_id=temp_access_key,
    aws_secret_access_key=temp_secret_key,
    aws_session_token=temp_session_token,
    region_name=region_name,
)
```
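To check the threshold and prefix-stripping logic of retrieve_and_get_contexts without calling AWS, you can feed the same logic a hand-built dict shaped like a Retrieve API response (a retrievalResults list whose items carry content.text and score). The sketch below copies just that filtering step into a hypothetical filter_contexts function; the sample texts and scores are made up.

```python
from typing import Any, Dict, List

PREFIX = "Document 1: "


def filter_contexts(retrieval_response: Dict[str, Any], threshold: float = 0.0) -> List[str]:
    """Keep chunks scoring at or above `threshold`, stripping the "Document 1: " prefix."""
    contexts = []
    for result in retrieval_response["retrievalResults"]:
        if result["score"] >= threshold:
            text = result["content"]["text"]
            if text.startswith(PREFIX):
                text = text[len(PREFIX):]
            contexts.append(text)
    return contexts


# Hand-built stand-in with the same shape as a Retrieve API response
fake_response = {
    "retrievalResults": [
        {"content": {"text": "Document 1: Example product description."}, "score": 0.62},
        {"content": {"text": "Unrelated text."}, "score": 0.12},
    ]
}

print(filter_contexts(fake_response, threshold=0.3))  # ['Example product description.']
print(filter_contexts(fake_response))  # ['Example product description.', 'Unrelated text.']
```

Raising SCORE_THRESHOLD keeps weaker matches out of the prompt, but it also tends to leave the model with less context, making the fallback answer more likely.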

Now that we have defined the basic functionality to set up our Assistant, let’s implement a standard RAG app using the AWS Knowledge Bases Retrieve API and the Converse API.

Our application will be conversational, supporting multi-turn dialogues. A new dialogue is instantiated as a RAGChat object defined below.

To have the Assistant respond to each user message in the dialogue, simply call this object’s chat() method. The RAGChat class properly manages conversation history, retrieval, and LLM response-generation via the AWS Converse API.
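Each turn sent to the Converse API is a dict with a role and a list of content blocks, and RAGChat prepends the numbered retrieved contexts to the user's question inside a single text block. A minimal sketch of that prompt construction, using a hypothetical build_user_message helper and placeholder strings, showing how the message history grows across a turn:

```python
from typing import Dict, List


def build_user_message(contexts: List[str], user_query: str) -> Dict:
    """Mirror the prompt format used in RAGChat.chat(): numbered contexts, then the question."""
    context_strings = "\n\n".join(
        f"Context {i + 1}: {context}" for i, context in enumerate(contexts)
    )
    return {
        "role": "user",
        "content": [{"text": f"{context_strings}\n\nQUESTION:\n{user_query}"}],
    }


messages: List[Dict] = []
messages.append(build_user_message(["Placeholder product text."], "How big is it?"))
# The Converse API returns the assistant turn in the same role/content shape,
# and RAGChat appends it to the history.
messages.append({"role": "assistant", "content": [{"text": "Placeholder answer."}]})

print(messages[0]["content"][0]["text"])
# Context 1: Placeholder product text.
#
# QUESTION:
# How big is it?
```

Because the full history is resent on every call, earlier contexts and answers stay visible to the model in later turns.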

Optional: Defining the RAG application, RAGChat


```python
class RAGChat:
    def __init__(self, model: str, kbId: str, client, system_message: str):
        self.model = model
        self.kbId = kbId
        self.client = client
        self.system_messages = [{"text": system_message}]  # Store the system message
        self.messages = []  # Initialize messages as an empty list

    def chat(self, user_query: str) -> str:
        """Performs RAG (Retrieval-Augmented Generation) using the provided model and Knowledge Base.

        Params:
            user_query: The user's question or query.

        Returns:
            Final response text generated by the model.
        """
        # Retrieve contexts based on the user query and knowledge base ID
        contexts = retrieve_and_get_contexts(
            user_query, self.kbId, threshold=SCORE_THRESHOLD
        )
        context_strings = "\n\n".join(
            [f"Context {i + 1}: {context}" for i, context in enumerate(contexts)]
        )

        # Construct the user message with the retrieved contexts
        user_message = {
            "role": "user",
            "content": [{"text": f"{context_strings}\n\nQUESTION:\n{user_query}"}],
        }
        self.messages.append(user_message)

        # Call generate_text with the updated messages
        final_messages = generate_text(
            messages=self.messages,
            model_id=self.model,
            system_messages=self.system_messages,
            bedrock_client=self.client,
        )

        # Extract and return the final response text
        return final_messages[-1]["content"][0]["text"]
```

Creating a Knowledge Base

To keep our example simple, we upload the product description to AWS S3 as a single file: simple_water_bottle.txt. This is the sole file our Knowledge Base will contain, but you can populate your actual Knowledge Base with many heterogeneous documents.
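For an S3 data source, Bedrock indexes newly uploaded documents only after the data source is synced (an ingestion job). A minimal sketch, assuming an S3 bucket, an existing data source ID, and boto3 clients for "s3" and "bedrock-agent" that you create yourself; upload_and_sync and its arguments are hypothetical names, and the clients are passed in so the logic can be tried with stand-ins.

```python
import os
from typing import Any


def upload_and_sync(
    local_path: str,
    bucket: str,
    knowledge_base_id: str,
    data_source_id: str,
    s3_client: Any,
    agent_client: Any,
) -> str:
    """Upload one file to S3, then start an ingestion job for the Knowledge Base data source.

    Returns the ingestion job ID so its status can be polled later.
    """
    key = os.path.basename(local_path)  # e.g. "simple_water_bottle.txt"
    s3_client.upload_file(local_path, bucket, key)
    response = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )
    return response["ingestionJob"]["ingestionJobId"]
```

With real clients (created with the same temporary credentials as above), you might call upload_and_sync("simple_water_bottle.txt", "<YOUR_BUCKET>", "<YOUR_KNOWLEDGE_BASE_ID>", "<YOUR_DATA_SOURCE_ID>", s3_client, agent_client) and then poll the bedrock-agent client's get_ingestion_job until the job's status is COMPLETE.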

To create a Knowledge Base using Amazon Bedrock, refer to the official documentation.

After you’ve created it, add your KNOWLEDGE_BASE_ID below.

```python
KNOWLEDGE_BASE_ID = "<YOUR-KNOWLEDGE_BASE_ID-HERE>"  # replace with your own Knowledge Base
```

Setting up the application goes as follows:

```python
# Model ARN for AWS
model_id = "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-5-sonnet-20240620-v1:0"

# Define instructions for the assistant
fallback_answer = "Based on the available information, I cannot provide a complete answer to this question."
system_message = f"""Do not make up answers to questions if you cannot find the necessary information.
If you remain unsure how to accurately respond to the user after considering the available information and tools, then only respond with: "{fallback_answer}".
"""

# Create RAG application
rag = RAGChat(
    model=model_id,
    kbId=KNOWLEDGE_BASE_ID,
    client=BEDROCK_GENERATION_CLIENT,
    system_message=system_message,
)
```

At this point, you can chat with the Assistant via rag.chat(your_query), as shown below. Before we demonstrate that, let’s first see how easy it is to integrate Codex.

Create Codex Project

To use Codex, first create a Project.

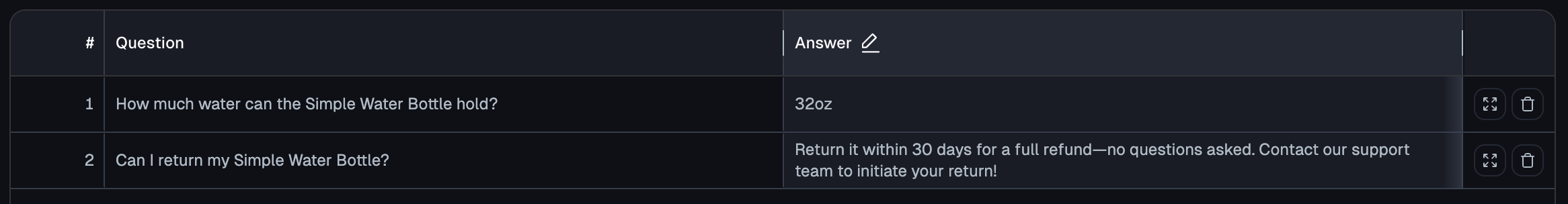

Here we assume some common (question, answer) pairs about the Simple Water Bottle have already been added to a Codex Project. To learn how that was done, see our tutorial: Populating Codex.

Our existing Codex Project contains the following entries:

Integrate Codex as-a-Backup

RAG apps unfortunately sometimes produce bad/unhelpful responses. Instead of providing these to your users, add Codex as a backup system that can automatically detect these cases and provide better answers.

Integrating Codex as-a-Backup requires just two steps:

- Configure the Codex backup system with your Codex Project credentials and settings that control what sort of responses are detected to be bad.
- Enhance your RAG app to:
  - Use Codex to monitor whether each Assistant response is bad.
  - Query Codex for a better answer when needed.
  - Update the conversation with Codex’s answer when needed.

After that, call your enhanced RAG app just like the original app; Codex works automatically in the background.
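Stripped of the Bedrock and Cleanlab specifics, the enhanced chat() is a small control flow: generate a response, check it, look up a better answer if needed, and overwrite the last assistant turn so later turns see the corrected history. A minimal sketch with hypothetical stand-in callables (generate, is_bad, lookup) in place of the Converse call, is_bad_response, and the Codex Project query:

```python
from typing import Callable, Dict, List, Optional

FALLBACK = "I cannot answer this question."


def chat_with_backup(
    messages: List[Dict],
    user_query: str,
    generate: Callable[[List[Dict]], str],
    is_bad: Callable[[str, str], bool],
    lookup: Callable[[str], Optional[str]],
) -> str:
    """Generate a reply; if it is flagged as bad and a backup answer exists, use that instead."""
    messages.append({"role": "user", "content": [{"text": user_query}]})
    response = generate(messages)
    messages.append({"role": "assistant", "content": [{"text": response}]})

    if is_bad(response, user_query):
        backup = lookup(user_query)
        if backup is not None:
            # Overwrite the last assistant turn so later turns see the better answer
            messages[-1]["content"] = [{"text": backup}]
            response = backup
    return response


# Stand-ins: the "model" always falls back, and the backup store knows one answer
backup_answers = {"Can I return it?": "Backup answer."}
history: List[Dict] = []
reply = chat_with_backup(
    history,
    "Can I return it?",
    generate=lambda msgs: FALLBACK,
    is_bad=lambda response, query: response == FALLBACK,
    lookup=backup_answers.get,
)
print(reply)  # Backup answer.
print(history[-1]["content"][0]["text"])  # Backup answer.
```

When the response is not flagged, or lookup returns None because no answer exists yet, the original response passes through unchanged.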

Below is all the code needed to integrate Codex.

Optional: Define the RAG application with Codex as a Backup, RAGChatWithCodexBackup

```python
from typing import Any, Dict, Optional

from cleanlab_codex import Project
from cleanlab_codex.response_validation import is_bad_response


class RAGChatWithCodexBackup(RAGChat):
    def __init__(
        self,
        model: str,
        kbId: str,
        client,
        system_message: str,
        codex_access_key: str,
        is_bad_response_config: Optional[Dict[str, Any]] = None,
    ):
        super().__init__(model, kbId, client, system_message)
        self._codex_project = Project.from_access_key(codex_access_key)
        self._is_bad_response_config = is_bad_response_config

    def _replace_latest_message(self, new_message: str) -> None:
        """
        Replaces the latest assistant message in the messages list with the provided content.
        It is assumed that the latest assistant message is the last message in the list.
        """
        latest_message = self.messages[-1]
        latest_message["content"] = [{"text": new_message}]

    def chat(self, user_message: str) -> str:
        # Get the original RAG app's response
        response = super().chat(user_message)

        # Check whether the response should be backed up by Codex
        kwargs = {"response": response, "query": user_message}
        if self._is_bad_response_config is not None:
            kwargs["config"] = self._is_bad_response_config

        if is_bad_response(**kwargs):
            # Ask Codex for a better answer; None means no answer is available yet
            codex_response: Optional[str] = self._codex_project.query(user_message)[0]
            if codex_response is not None:
                self._replace_latest_message(codex_response)
                response = codex_response

        return response
```

Codex automatically detects when your RAG app needs backup. Here we provide a basic configuration for this detection that relies on Cleanlab’s Trustworthy Language Model and the fallback answer that we instructed the Assistant to output whenever it doesn’t know how to respond accurately. With this configuration, Codex will be consulted as-a-Backup whenever your Assistant’s response is estimated to be unhelpful.

Learn more about available detection methods and configurations via our tutorial: Codex as-a-Backup - Advanced Options.

```python
from cleanlab_tlm import TLM

tlm_api_key = "<YOUR_TLM_API_KEY>"  # Get your TLM API key here: https://tlm.cleanlab.ai/
access_key = "<YOUR_PROJECT_ACCESS_KEY>"  # Available from your Project's settings page at: https://codex.cleanlab.ai/

is_bad_response_config = {
    "tlm": TLM(api_key=tlm_api_key),
    "fallback_answer": fallback_answer,
}

# Instantiate RAG app enhanced with Codex as-a-Backup
rag_with_codex = RAGChatWithCodexBackup(
    model=model_id,
    kbId=KNOWLEDGE_BASE_ID,
    client=BEDROCK_GENERATION_CLIENT,
    system_message=system_message,
    codex_access_key=access_key,
    is_bad_response_config=is_bad_response_config,
)
```
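The detection configuration pairs TLM with the fallback answer the Assistant was instructed to emit. To illustrate only the fallback-matching idea (this is not Cleanlab's implementation of is_bad_response, and it ignores TLM entirely), here is a naive check using string similarity from the standard library's difflib; the looks_like_fallback name and the 0.7 threshold are arbitrary assumptions.

```python
from difflib import SequenceMatcher

FALLBACK_ANSWER = (
    "Based on the available information, "
    "I cannot provide a complete answer to this question."
)


def looks_like_fallback(
    response: str, fallback: str = FALLBACK_ANSWER, threshold: float = 0.7
) -> bool:
    """Flag a response whose character-level similarity to `fallback` is at least `threshold`."""
    ratio = SequenceMatcher(
        None, response.lower().strip(), fallback.lower().strip()
    ).ratio()
    return ratio >= threshold


print(looks_like_fallback(FALLBACK_ANSWER))  # True
print(looks_like_fallback("Yes."))  # False
```

A pure string match only catches responses that closely paraphrase the fallback; it cannot judge whether an otherwise fluent answer is trustworthy.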

RAG with Codex in action

We can now ask user queries to our original RAG app (rag), as well as another version of this RAG app enhanced with Codex (rag_with_codex).

Example 1

Let’s ask a question to our original RAG app (before Codex was integrated).

```python
user_question = "Can I return my simple water bottle?"
rag.chat(user_question)
```

The original RAG app is unable to answer, in this case because the required information is not in its Knowledge Base.

Let’s ask the same question to the RAG app enhanced with Codex.

```python
rag_with_codex.chat(user_question)
```

As you can see, integrating Codex enables your RAG app to answer questions it originally struggled with, as long as a similar question was already answered in the corresponding Codex Project.

Example 2

Let’s ask another question to our RAG app with Codex integrated.

```python
user_question = "How can I order the Simple Water Bottle in bulk?"
rag.chat(user_question)
```

```python
rag_with_codex.chat(user_question)
```

Our RAG app is unable to answer this question because there is no relevant information in its Knowledge Base, nor has a similar question been answered in the Codex Project (see the contents of the Codex Project above).

Codex automatically recognizes that this question could not be answered and logs it into the Project, where it awaits an answer from an SME. Navigate to your Codex Project in the Web App, where you (or an SME at your company) can enter the desired answer for this query.

As soon as an answer is provided in Codex, our RAG app will be able to answer all similar questions going forward (as seen for the previous query).

Example 3

Let’s ask another query to our two RAG apps.

```python
user_question = "How big is the water bottle?"
rag.chat(user_question)
```

The original RAG app was able to correctly answer without Codex (since the relevant information exists in the Knowledge Base).

```python
rag_with_codex.chat(user_question)
```

We see that the RAG app with Codex integrated is still able to correctly answer this query. Integrating Codex has no negative effect on questions your original RAG app could answer.

Next Steps

Now that Codex is integrated with your RAG app, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

This tutorial demonstrated how to easily integrate Codex as a backup system into any AWS Knowledge Bases application. Unlike tool calls, which are harder to control, integrating Codex as-a-Backup lets you choose when Codex is consulted. For instance, you can use Codex to automatically detect whenever the Assistant produces hallucinations or unhelpful responses such as “I don’t know”.

Adding Codex only improves your RAG app. Once integrated, Codex automatically logs all user queries that your original RAG app handles poorly. Using a simple web interface, SMEs at your company can answer the highest priority questions in the Codex Project. As soon as an answer is entered in Codex, your RAG app will be able to properly handle all similar questions encountered in the future.

Codex is the fastest way for nontechnical SMEs to directly improve your AI Assistant. As the developer, you simply integrate Codex once, and from then on, SMEs can continuously improve how your Assistant handles common user queries without needing your help.

Need help, more capabilities, or other deployment options?

Check the FAQ or email us at: support@cleanlab.ai