Integrate Codex as-a-Tool with AWS Bedrock Knowledge Bases

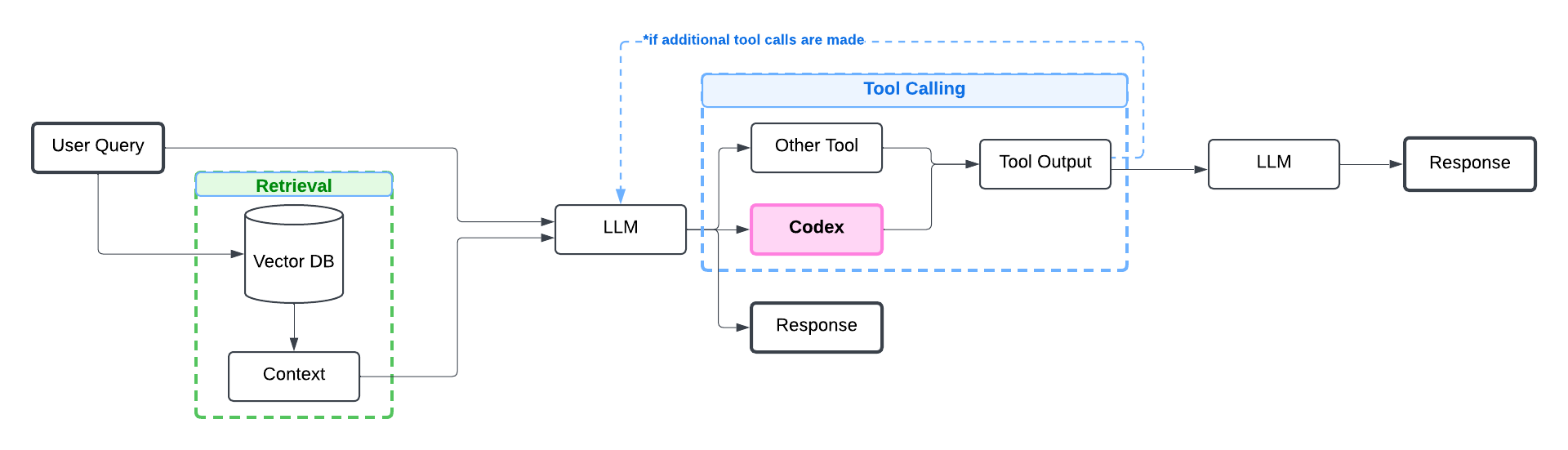

This tutorial assumes you have a RAG app that supports tool calls, built using AWS Bedrock Knowledge Bases. Learn how to add tool calls to your AWS RAG app via our tutorial: RAG with Tool Calls in AWS Knowledge Bases.

Once you have a RAG app that supports tool calling, adding Codex as an additional tool takes minimal effort and lets your AI application answer questions it previously could not.

If you prefer to integrate Codex without adding tool calls to your application, check out our tutorial: Integrate Codex as-a-Backup with AWS Knowledge Bases.

Let’s first install the packages required for this tutorial and set up the required AWS configurations.

%pip install --upgrade cleanlab-codex

Optional: Set up AWS configurations

import os

import boto3
from botocore.client import Config

os.environ["AWS_ACCESS_KEY_ID"] = (
    "<YOUR_AWS_ACCESS_KEY_ID>"  # Your permanent access key (not session access key)
)
os.environ["AWS_SECRET_ACCESS_KEY"] = (
    "<YOUR_AWS_SECRET_ACCESS_KEY>"  # Your permanent secret access key (not session secret access key)
)
os.environ["MFA_DEVICE_ARN"] = (
    "<YOUR_MFA_DEVICE_ARN>"  # Find this in AWS Console under: settings -> security credentials -> your mfa device
)
os.environ["AWS_REGION"] = "us-east-1"  # Specify your AWS region

aws_access_key_id = os.getenv("AWS_ACCESS_KEY_ID")
aws_secret_access_key = os.getenv("AWS_SECRET_ACCESS_KEY")
region_name = os.getenv("AWS_REGION", "us-east-1")  # Default to 'us-east-1' if not set
mfa_serial_number = os.getenv("MFA_DEVICE_ARN")

if not all([aws_access_key_id, aws_secret_access_key, mfa_serial_number]):
    raise EnvironmentError(
        "Missing required environment variables. Ensure AWS_ACCESS_KEY_ID, "
        "AWS_SECRET_ACCESS_KEY, and MFA_DEVICE_ARN are set."
    )

# Enter MFA code in case your AWS organization requires it
mfa_token_code = input("Enter your MFA code: ")
print("MFA code entered:", mfa_token_code)

sts_client = boto3.client(
    "sts",
    aws_access_key_id=aws_access_key_id,
    aws_secret_access_key=aws_secret_access_key,
    region_name=region_name,
)

try:
    # Request temporary credentials
    response = sts_client.get_session_token(
        DurationSeconds=3600 * 24,  # Valid for 24 hours
        SerialNumber=mfa_serial_number,
        TokenCode=mfa_token_code,
    )
    temp_credentials = response["Credentials"]
    temp_access_key = temp_credentials["AccessKeyId"]
    temp_secret_key = temp_credentials["SecretAccessKey"]
    temp_session_token = temp_credentials["SessionToken"]

    # Create a Bedrock Agent Runtime client
    client = boto3.client(
        "bedrock-agent-runtime",
        aws_access_key_id=temp_access_key,
        aws_secret_access_key=temp_secret_key,
        aws_session_token=temp_session_token,
        region_name=region_name,
    )
    print("Bedrock client successfully created.")
except Exception as e:
    print(f"Error creating Bedrock client: {e}")

Example RAG App: Product Customer Support

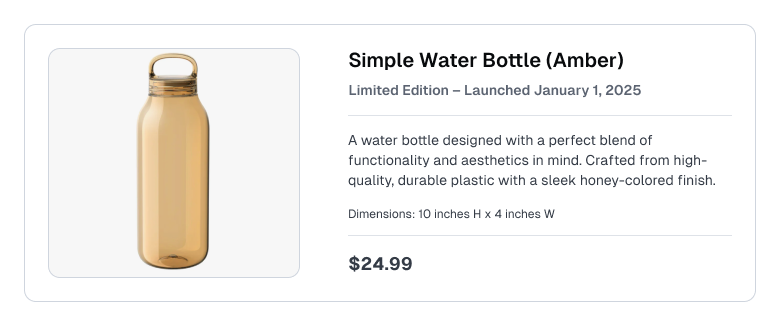

Let’s revisit our RAG app built in the RAG with Tool Calls in AWS Knowledge Bases tutorial, which has the option to call a get_todays_date() tool. This example represents a customer support / e-commerce use-case where the Knowledge Base contains product listings like the following:

The details of this minimal RAG app are unimportant if you are familiar with RAG and tool calling in AWS. Otherwise, refer to the RAG with Tool Calls in AWS Knowledge Bases tutorial, which walks through the helper methods defined below and how to set up a Knowledge Base. To keep our example minimal, we assume the product description text above has already been uploaded into a Knowledge Base for our RAG app. In practice, your Knowledge Base will have more documents/data than this single product description.

Optional: Helper methods from prior tutorial (RAG with Tool Calls in AWS Bedrock Knowledge Bases)

import json
from datetime import datetime

# Choices that govern how your AI behaves
fallback_answer = "Based on the available information, I cannot provide a complete answer to this question."

# The f-string already fills in {fallback_answer}, so no extra .format() call is needed
system_prompt_without_codex = f"""You are a helpful assistant designed to help users navigate a complex set of documents for question-answering tasks. Answer the user's Question based on the following possibly relevant Context and previous chat history using the tools provided if necessary. Follow these rules in order:
1. NEVER use phrases like "according to the context," "as the context states," etc. Treat the Context as your own knowledge, not something you are referencing.
2. Use only information from the provided Context. Your purpose is to provide information based on the Context, not to offer original advice.
3. Give a clear, short, and accurate answer. Explain complex terms if needed.
4. If the answer to the question requires today's date, use the following tool: get_todays_date. Return the date in the exact format the tool provides it.
5. If you remain unsure how to answer the Question then only respond with: "{fallback_answer}"
Remember, your purpose is to provide information based on the Context, not to offer original advice.
"""

# Define tool that is available for LLM to call
def get_todays_date(date_format: str) -> str:
    """A tool that returns today's date in the date format requested."""
    datetime_str = datetime.now().strftime(date_format)
    return datetime_str

todays_date_tool_json = {
    "toolSpec": {
        "name": "get_todays_date",
        "description": "A tool that returns today's date in the date format requested. Options are: '%Y-%m-%d', '%d', '%m', '%Y'.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {
                    "date_format": {
                        "type": "string",
                        "description": "The format that the tool requests the date in."
                    }
                },
                "required": [
                    "date_format"
                ]
            }
        }
    }
}

tool_config_without_codex = {
    "tools": [todays_date_tool_json]
}

model = 'arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-haiku-20240307-v1:0'  # Which LLM to use
KNOWLEDGE_BASE_ID = 'DASYAHIOKX'  # See previous tutorial for how to set up the Knowledge Base

# For educational purposes, we track all conversation history by appending to this variable in each RAG call,
# so you can later inspect logs of what happened
messages = []

# Setup retrieval for AWS Bedrock Knowledge Bases
bedrock_config = Config(connect_timeout=120, read_timeout=120, retries={'max_attempts': 0})

BEDROCK_RETRIEVE_CLIENT = boto3.client(
    "bedrock-agent-runtime",
    config=bedrock_config,
    aws_access_key_id=temp_access_key,
    aws_secret_access_key=temp_secret_key,
    aws_session_token=temp_session_token,
    region_name=region_name
)

BEDROCK_GENERATION_CLIENT = boto3.client(
    service_name='bedrock-runtime',
    aws_access_key_id=temp_access_key,
    aws_secret_access_key=temp_secret_key,
    aws_session_token=temp_session_token,
    region_name=region_name
)

def retrieve(query, knowledgebase_id, numberOfResults=3):
    return BEDROCK_RETRIEVE_CLIENT.retrieve(
        retrievalQuery={
            'text': query
        },
        knowledgeBaseId=knowledgebase_id,
        retrievalConfiguration={
            'vectorSearchConfiguration': {
                'numberOfResults': numberOfResults,
                'overrideSearchType': "HYBRID"
            }
        }
    )

def retrieve_and_get_contexts(query, knowledgebase_id, numberOfResults=3):
    retrieval_results = retrieve(query, knowledgebase_id, numberOfResults)
    contexts = []
    for retrievedResult in retrieval_results['retrievalResults']:
        text = retrievedResult['content']['text']
        if text.startswith("Document 1: "):
            text = text[len("Document 1: "):]
        contexts.append(text)
    return contexts

# Methods for LLM response generation with Tool Calls (via AWS Converse API)
def form_prompt(user_question: str, contexts: list) -> str:
    """Forms the prompt to be used for querying the model."""
    context_strings = "\n\n".join([f"Context {i + 1}: {context}" for i, context in enumerate(contexts)])
    query_with_context = f"{context_strings}\n\nQUESTION:\n{user_question}"

    # Below step is just formatting the final prompt for readability in the tutorial
    indented_question_with_context = "\n".join(f" {line}" for line in query_with_context.splitlines())
    return indented_question_with_context

def generate_text(user_question: str, model: str, tools: dict, system_prompts: list, messages: list[dict], bedrock_client) -> list[dict]:
    """Generates text dynamically handling tool use within Amazon Bedrock.

    Params:
        user_question: The user's original question, passed unchanged to any tool that takes a `question` argument.
        model: Identifier for the Amazon Bedrock model.
        tools: Tool configuration listing the tools the model can call.
        system_prompts: List of system prompts in the Converse API format.
        messages: List of message history in the desired format.
        bedrock_client: Client to interact with Bedrock API.

    Returns:
        messages: Final updated list of messages including tool interactions and responses.
    """
    # Initial call to the model
    response = bedrock_client.converse(
        modelId=model,
        messages=messages,
        toolConfig=tools,
        system=system_prompts,
    )
    output_message = response["output"]["message"]
    stop_reason = response["stopReason"]
    messages.append(output_message)

    while stop_reason == "tool_use":
        # Extract tool requests from the model response
        tool_requests = output_message.get("content", [])

        for tool_request in tool_requests:
            if "toolUse" in tool_request:
                tool = tool_request["toolUse"]
                tool_name = tool["name"]
                tool_input = tool["input"]
                tool_use_id = tool["toolUseId"]

                try:
                    # Overwrite any 'question' argument with the user's original question,
                    # so the LLM cannot rephrase it (remove this step to let the LLM reword the query)
                    if 'question' in tool_input:
                        tool_input['question'] = user_question

                    print(f"[internal log] Requesting tool {tool_name} with arguments: {tool_input}.")
                    tool_output_json = _handle_any_tool_call_for_stream_response(tool_name, tool_input)
                    tool_result = json.loads(tool_output_json)
                    print(f"[internal log] Tool response: {tool_result}")

                    # If tool call resulted in an error. Only dict outputs can carry an "error" key;
                    # a tool may also return a plain string or None.
                    if isinstance(tool_result, dict) and "error" in tool_result:
                        tool_result_message = {
                            "role": "user",
                            "content": [{"toolResult": {
                                "toolUseId": tool_use_id,
                                "content": [{"text": tool_result["error"]}],
                                "status": "error"
                            }}]
                        }
                    else:
                        # Format successful tool response
                        tool_result_message = {
                            "role": "user",
                            "content": [{"toolResult": {
                                "toolUseId": tool_use_id,
                                "content": [{"json": {"response": tool_result}}]
                            }}]
                        }
                except Exception as e:
                    # Handle unexpected exceptions during tool handling
                    tool_result_message = {
                        "role": "user",
                        "content": [{"toolResult": {
                            "toolUseId": tool_use_id,
                            "content": [{"text": f"Error processing tool: {str(e)}"}],
                            "status": "error"
                        }}]
                    }

                # Append the tool result to messages
                messages.append(tool_result_message)

        # Send the updated messages back to the model
        response = bedrock_client.converse(
            modelId=model,
            messages=messages,
            toolConfig=tools,
            system=system_prompts,
        )
        output_message = response["output"]["message"]
        stop_reason = response["stopReason"]
        messages.append(output_message)

    return messages

def _handle_any_tool_call_for_stream_response(function_name: str, arguments: dict) -> str:
    """Handles any tool dynamically by calling the function by name and passing in collected arguments.

    Returns the tool output serialized as a JSON string.
    Returns a JSON string with an "error" key if the tool is not found, not callable, or called incorrectly.
    """
    tool_function = globals().get(function_name) or locals().get(function_name)

    if callable(tool_function):
        try:
            # Dynamically call the tool function with arguments
            tool_output = tool_function(**arguments)
            return json.dumps(tool_output)
        except Exception as e:
            return json.dumps({
                "error": f"Exception while calling tool '{function_name}': {str(e)}",
                "arguments": arguments,
            })
    else:
        return json.dumps({
            "error": f"Tool '{function_name}' not found or not callable.",
            "arguments": arguments,
        })
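The dispatch helper looks tools up by name in globals(). As a minimal sketch of the same idea with an explicit registry, the lookup, call, and JSON-encoded error handling could look like the following. The names TOOL_REGISTRY and dispatch_tool are hypothetical and not part of the tutorial's code; get_todays_date is re-declared so the snippet runs on its own.

```python
import json
from datetime import datetime


def get_todays_date(date_format: str) -> str:
    """Return today's date in the requested strftime format."""
    return datetime.now().strftime(date_format)


# Hypothetical explicit registry: only functions listed here can be called by name
TOOL_REGISTRY = {"get_todays_date": get_todays_date}


def dispatch_tool(function_name: str, arguments: dict) -> str:
    """Call a registered tool by name and return its output as a JSON string."""
    tool_function = TOOL_REGISTRY.get(function_name)
    if not callable(tool_function):
        return json.dumps({
            "error": f"Tool '{function_name}' not found or not callable.",
            "arguments": arguments,
        })
    try:
        return json.dumps(tool_function(**arguments))
    except Exception as e:  # e.g. the LLM supplied wrong argument names
        return json.dumps({
            "error": f"Exception while calling tool '{function_name}': {e}",
            "arguments": arguments,
        })


year = json.loads(dispatch_tool("get_todays_date", {"date_format": "%Y"}))
missing = json.loads(dispatch_tool("no_such_tool", {}))
print(year)
print(missing["error"])
```

An explicit registry restricts what the LLM can invoke to the functions you list, whereas a globals() lookup can reach any callable defined in the module.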

To generate responses to user queries using the AWS APIs, we define a standard RAG method. See the RAG with Tool Calls in AWS Knowledge Bases tutorial for details. Subsequently, we integrate Codex-as-a-Tool and demonstrate its benefits.

Optional: RAG method from prior tutorial (RAG with Tool Calls in AWS Bedrock Knowledge Bases)

def rag(model: str, user_question: str, system_prompt: str, tools: dict, messages: list, knowledgebase_id: str) -> str:
    """Performs RAG (Retrieval-Augmented Generation) using the provided model and tools, via AWS Bedrock Knowledge Bases and the Converse API."""
    contexts = retrieve_and_get_contexts(user_question, knowledgebase_id)
    query_with_context = form_prompt(user_question, contexts)
    print(f"[internal log] Invoking LLM with prompt + context\n{query_with_context}\n\n")

    user_message = {"role": "user", "content": [{"text": query_with_context}]}
    messages.append(user_message)
    system_prompts = [{'text': system_prompt}]

    final_messages = generate_text(user_question=user_question, model=model, tools=tools, system_prompts=system_prompts, messages=messages, bedrock_client=BEDROCK_GENERATION_CLIENT)
    # The last message is the assistant's final reply; return its text
    return final_messages[-1]["content"][-1]["text"]
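To see what the LLM receives from rag(), here is a small standalone sketch of the prompt formatting step. It re-declares form_prompt so it runs without AWS access, and the two context strings are shortened excerpts of the product description used in this tutorial (in the real app they come from the Knowledge Base).

```python
def form_prompt(user_question: str, contexts: list) -> str:
    """Number each retrieved context, then append the user's question."""
    context_strings = "\n\n".join(f"Context {i + 1}: {context}" for i, context in enumerate(contexts))
    query_with_context = f"{context_strings}\n\nQUESTION:\n{user_question}"
    # Prefix every line with one space, purely for readability in logs
    return "\n".join(f" {line}" for line in query_with_context.splitlines())


contexts = [
    "Simple Water Bottle - Amber. Price: $24.99",
    "Dimensions: 10 inches height x 4 inches width",
]
prompt = form_prompt("How big is the water bottle?", contexts)
print(prompt)
```

Each context is labeled with its position, and the question always comes last, so the system prompt's references to "Context" and "Question" line up with what the model sees.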

Create Project in Codex Web App

To use Codex, you first need to create a Project.

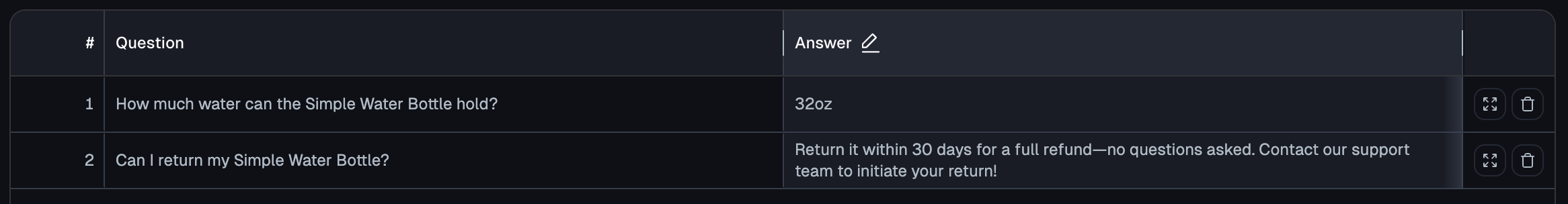

Here we assume some common (question, answer) pairs about the Simple Water Bottle have already been added to a Codex Project. To learn how that was done, see our tutorial: Populating Codex.

Our existing Codex Project contains the following entries:

access_key = "<YOUR-PROJECT-ACCESS-KEY>" # Obtain from your Project's settings page: https://codex.cleanlab.ai/projects/<YOUR-PROJECT-ID>/settings

Integrate Codex as an additional tool

Integrating Codex into a RAG app that supports tool calling requires minimal code changes:

- Import Codex and add it into your list of tools.

- Update your system prompt to include instructions for calling Codex, as demonstrated below in: system_prompt_with_codex.

After that, call your original RAG pipeline with these updated variables to start experiencing the benefits of Codex!

Note: Here we obtain a Codex tool description in AWS-ready format via to_aws_converse_tool(). You can obtain the Codex tool description in other provided formats as well, or manually write it yourself to suit your needs.
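For the manual route mentioned in the note, a hand-written description would follow the same toolSpec shape as todays_date_tool_json. The sketch below rests on assumptions: the tool name consult_codex and its question argument match what appears in the message history at the end of this tutorial, but the description strings are illustrative wording and are not the output of to_aws_converse_tool().

```python
import json

# Hand-written Converse-style tool description (illustrative wording, not the library's output)
manual_codex_tool_json = {
    "toolSpec": {
        "name": "consult_codex",
        "description": "Look up an answer to the user's question when the provided Context does not contain it.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {
                    "question": {
                        "type": "string",
                        "description": "A self-contained version of the user's question.",
                    }
                },
                "required": ["question"],
            }
        },
    }
}

# A hand-written description slots into the tool configuration like any other tool
manual_tool_config = {"tools": [manual_codex_tool_json]}
print(json.dumps(manual_tool_config, indent=2))
```

With a hand-written description, you would still need a callable registered under the same name so the tool dispatch helper can find it.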

from cleanlab_codex import CodexTool

codex_tool = CodexTool.from_access_key(access_key=access_key, fallback_answer=fallback_answer)
codex_tool_aws = codex_tool.to_aws_converse_tool()

# Make the tool's function accessible by name in globals(),
# which is where the tool dispatch helper defined earlier looks it up
globals()[codex_tool.tool_name] = codex_tool.query

tool_config_with_codex = {
    "tools": [codex_tool_aws, todays_date_tool_json]
}  # Add Codex to the list of tools that LLM can call

# Update the RAG system prompt with instructions for handling Codex (adjust based on your needs)
# The f-string already fills in {fallback_answer}, so no extra .format() call is needed
system_prompt_with_codex = f"""You are a helpful assistant designed to help users navigate a complex set of documents for question-answering tasks. Answer the user's Question based on the following possibly relevant Context and previous chat history using the tools provided if necessary. Follow these rules in order:
1. NEVER use phrases like "according to the context," "as the context states," etc. Treat the Context as your own knowledge, not something you are referencing.
2. Use only information from the provided Context. Your purpose is to provide information based on the Context, not to offer original advice.
3. Give a clear, short, and accurate answer. Explain complex terms if needed.
4. If you remain unsure how to answer the user query, then use the consult_codex tool to search for the answer. Always call consult_codex whenever the provided Context does not answer the user query. Do not call consult_codex if you already know the right answer or the necessary information is in the provided Context. Your query to consult_codex should match the user's original query, unless minor clarification is needed to form a self-contained query. After you have called consult_codex, determine whether its answer seems helpful, and if so, respond with this answer to the user. If the answer from consult_codex does not seem helpful, then simply ignore it.
5. If you remain unsure how to answer the Question (even after using the consult_codex tool and considering the provided Context), then only respond with: "{fallback_answer}"
"""

RAG with Codex in action

Integrating Codex as-a-Tool allows your RAG app to answer more questions than it was originally capable of.

Example 1

Let’s ask a question to our original RAG app (before Codex was integrated).

user_question = "Can I return my simple water bottle?"

rag_response = rag(model=model, user_question=user_question, system_prompt=system_prompt_without_codex, tools=tool_config_without_codex, messages=messages, knowledgebase_id=KNOWLEDGE_BASE_ID)

print(f'[RAG response] {rag_response}')

The original RAG app is unable to answer, in this case because the required information is not in its Knowledge Base.

Let’s ask the same question to our RAG app with Codex added as an additional tool. Note that we use the updated system prompt and tool list when Codex is integrated in the RAG app.

user_question = "Can I return my simple water bottle?"

rag_response = rag(model=model, user_question=user_question, system_prompt=system_prompt_with_codex, tools=tool_config_with_codex, messages=messages, knowledgebase_id=KNOWLEDGE_BASE_ID) # Codex is added here

print(f'[RAG response] {rag_response}')

As you can see, integrating Codex enables your RAG app to answer questions it originally struggled with, as long as a similar question was already answered in the corresponding Codex Project.

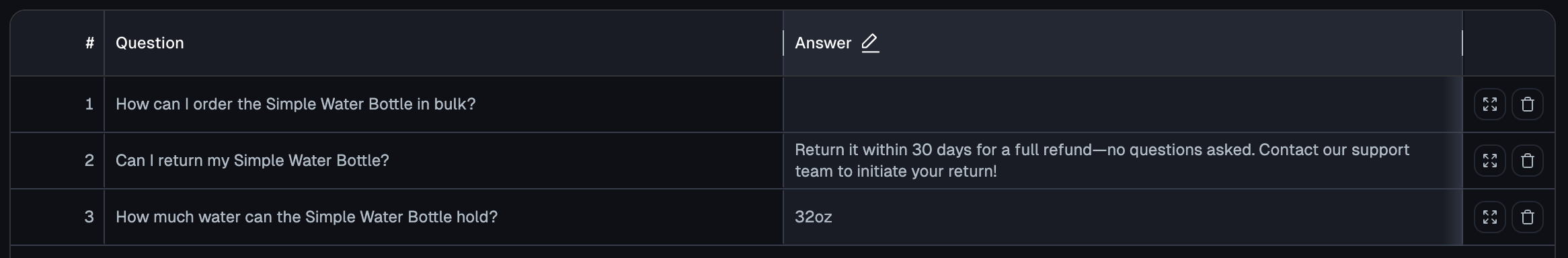

Example 2

Let’s ask another question to our RAG app with Codex integrated.

user_question = "How can I order the Simple Water Bottle in bulk?"

rag_response = rag(model=model, user_question=user_question, system_prompt=system_prompt_with_codex, tools=tool_config_with_codex, messages=messages, knowledgebase_id=KNOWLEDGE_BASE_ID) # Codex is added here

print(f'[RAG response] {rag_response}')

Our RAG app is unable to answer this question because there is no relevant information in its Knowledge Base, nor has a similar question been answered in the Codex Project (see the contents of the Codex Project above).

Codex automatically recognizes that this question could not be answered and logs it into the Project, where it awaits an answer from an SME.

As soon as an answer is provided in Codex, our RAG app will be able to answer all similar questions going forward (as seen for the previous query).
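In the message history at the end of this tutorial, the tool result for this query is recorded as an error ("argument of type 'NoneType' is not iterable"), which suggests the tool returned no answer. As a minimal sketch, assuming None means "no answer available", a tool result message could be built so that an empty answer is not reported as a failure. The helper name build_tool_result_message and the placeholder text are hypothetical.

```python
def build_tool_result_message(tool_use_id: str, tool_result) -> dict:
    """Wrap a tool's output in a Converse-style toolResult message."""
    if isinstance(tool_result, dict) and "error" in tool_result:
        # The tool itself reported a failure
        content, status = [{"text": str(tool_result["error"])}], "error"
    elif tool_result is None:
        # Assumption: None means no answer is available, which is not a failure
        content, status = [{"text": "No answer is available for this question."}], "success"
    else:
        content, status = [{"json": {"response": tool_result}}], "success"
    return {
        "role": "user",
        "content": [{"toolResult": {"toolUseId": tool_use_id, "content": content, "status": status}}],
    }


no_answer = build_tool_result_message("tooluse_example", None)
answered = build_tool_result_message("tooluse_example", "Return it within 30 days for a full refund.")
failed = build_tool_result_message("tooluse_example", {"error": "Tool not found."})
for message in (no_answer, answered, failed):
    print(message["content"][0]["toolResult"]["status"])
```

Checking isinstance(tool_result, dict) first also avoids treating a plain-text answer that happens to contain the word "error" as a failed tool call.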

Example 3

Let’s ask another query to our RAG app with Codex integrated. This is a query the original RAG app was able to correctly answer without Codex (since the relevant information exists in the Knowledge Base).

user_question = "How big is the water bottle?"

rag_response = rag(model=model, user_question=user_question, system_prompt=system_prompt_with_codex, tools=tool_config_with_codex, messages=messages, knowledgebase_id=KNOWLEDGE_BASE_ID) # Codex is added here

print(f'[RAG response] {rag_response}')

We see that the RAG app with Codex integrated is still able to correctly answer this query. Integrating Codex has no negative effect on questions your original RAG app could answer.

Optional: Review full message history (includes tool calls)

# For educational purposes, we passed `messages` into every RAG call and logged every step in this variable.

for message in messages:
    print(message)

{'role': 'user', 'content': [{'text': ' Context 1: Simple Water Bottle - Amber (limited edition launched Jan 1st 2025) A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish. Price: $24.99 \\nDimensions: 10 inches height x 4 inches width\n \n QUESTION:\n Can I return my simple water bottle?'}]}

{'role': 'assistant', 'content': [{'text': "According to the context provided, the Simple Water Bottle - Amber is a limited edition product that was launched on January 1st, 2025. The context does not mention any return policy for this product. Without further information about the vendor's return policy, I cannot definitively say whether you can return your simple water bottle. The best course of action would be to check with the seller or manufacturer regarding their return and exchange policies."}]}

{'role': 'user', 'content': [{'text': ' Context 1: Simple Water Bottle - Amber (limited edition launched Jan 1st 2025) A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish. Price: $24.99 \\nDimensions: 10 inches height x 4 inches width\n \n QUESTION:\n Can I return my simple water bottle?'}]}

{'role': 'assistant', 'content': [{'toolUse': {'toolUseId': 'tooluse_RohvzQK7SWmIgQ5kKXXB8Q', 'name': 'consult_codex', 'input': {'question': 'Can I return my simple water bottle?'}}}]}

{'role': 'user', 'content': [{'toolResult': {'toolUseId': 'tooluse_RohvzQK7SWmIgQ5kKXXB8Q', 'content': [{'json': {'response': 'Return it within 30 days for a full refund-- no questions asked. Contact our support team to initiate your return!'}}]}}]}

{'role': 'assistant', 'content': [{'text': 'Based on the information returned from consulting the codex, it seems that the simple water bottle does have a return policy that allows you to return it within 30 days for a full refund. The context did not explicitly state the return policy, but the codex provides that additional detail. Therefore, you should be able to return your simple water bottle if you do so within 30 days of your purchase.'}]}

{'role': 'user', 'content': [{'text': ' Context 1: Simple Water Bottle - Amber (limited edition launched Jan 1st 2025) A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish. Price: $24.99 \\nDimensions: 10 inches height x 4 inches width\n \n QUESTION:\n How can I order the Simple Water Bottle in bulk?'}]}

{'role': 'assistant', 'content': [{'toolUse': {'toolUseId': 'tooluse_bhr6ejGzTLuiY0MngIySyQ', 'name': 'consult_codex', 'input': {'question': 'How can I order the Simple Water Bottle in bulk?'}}}]}

{'role': 'user', 'content': [{'toolResult': {'toolUseId': 'tooluse_bhr6ejGzTLuiY0MngIySyQ', 'content': [{'text': "Error processing tool: argument of type 'NoneType' is not iterable"}], 'status': 'error'}}]}

{'role': 'assistant', 'content': [{'text': "The context provided does not contain any information about ordering the Simple Water Bottle in bulk. Without additional details about the product's availability or the seller's bulk ordering options, I do not have enough information to provide a complete answer to this question. Based on the available context, I cannot recommend how to order the Simple Water Bottle in bulk. If more details become available, I would be happy to revisit this and try to provide a more helpful response."}]}

{'role': 'user', 'content': [{'text': ' Context 1: Simple Water Bottle - Amber (limited edition launched Jan 1st 2025) A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish. Price: $24.99 \\nDimensions: 10 inches height x 4 inches width\n \n QUESTION:\n How big is the water bottle?'}]}

{'role': 'assistant', 'content': [{'text': 'According to the context provided, the dimensions of the Simple Water Bottle - Amber are:\n\n10 inches height x 4 inches width\n\nSo the size of this water bottle is 10 inches tall and 4 inches wide.'}]}
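Raw message dictionaries like those above are hard to scan. The following sketch condenses a history into one line per message, showing the role and the kind of each content block. The helper name summarize_messages is hypothetical, and example_messages is a shortened, made-up history in the same shape as the output above rather than a real log.

```python
def summarize_messages(messages: list) -> list:
    """Return one short line per message: its role plus the kind of each content block."""
    lines = []
    for message in messages:
        kinds = []
        for block in message.get("content", []):
            if "toolUse" in block:
                kinds.append(f"toolUse({block['toolUse']['name']})")
            elif "toolResult" in block:
                # The logged messages omit "status" on success, so default to "success"
                kinds.append(f"toolResult({block['toolResult'].get('status', 'success')})")
            else:
                kinds.append("text")
        lines.append(f"{message['role']}: {', '.join(kinds)}")
    return lines


# Hypothetical, shortened history in the same shape as the logged messages
example_messages = [
    {"role": "user", "content": [{"text": "QUESTION: Can I return my simple water bottle?"}]},
    {"role": "assistant", "content": [{"toolUse": {"toolUseId": "t1", "name": "consult_codex",
                                                   "input": {"question": "Can I return my simple water bottle?"}}}]},
    {"role": "user", "content": [{"toolResult": {"toolUseId": "t1",
                                                 "content": [{"json": {"response": "Return it within 30 days."}}]}}]},
    {"role": "assistant", "content": [{"text": "You can return it within 30 days."}]},
]
summary = summarize_messages(example_messages)
print("\n".join(summary))
```

A summary like this makes it easy to confirm which queries triggered a consult_codex call and which were answered from the Knowledge Base alone.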

Next Steps

Now that Codex is integrated with your RAG app, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

Adding Codex only improves your RAG app. As seen here, integrating Codex into your RAG app requires minimal extra code. Once integrated, the Codex Project automatically logs all user queries that your original RAG app handles poorly. Using a simple web interface, SMEs at your company can answer the highest priority questions in the Codex Project. As soon as an answer is entered in Codex, your RAG app will be able to properly handle all similar questions encountered in the future.

Codex is the fastest way for nontechnical SMEs to directly improve your RAG app. As the Developer, you simply integrate Codex once, and from then on, SMEs can continuously improve how your AI handles common user queries without needing your help. Codex remains compatible with any RAG architecture, so engineers can update your RAG system unhindered.

Need help, more capabilities, or other deployment options? Check the FAQ or email us at: support@cleanlab.ai