Integrating Codex as-a-Backup with your RAG app - Advanced Options

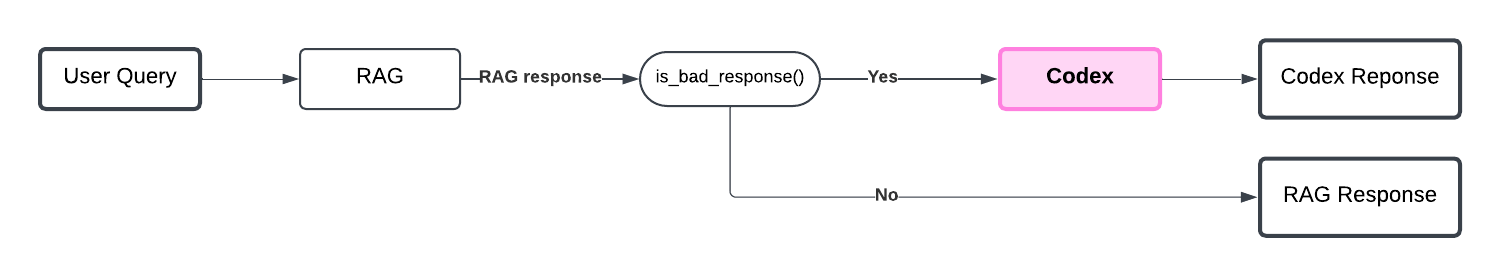

Recall that integrating Codex as-a-Backup enables your RAG app to prevent bad responses to certain queries. Codex automatically detects queries where your RAG produces a bad response, and logs them for SMEs to answer. Once a desired answer is provided via the Codex Interface, your RAG app will be able to properly respond to similar queries going forward.

What constitutes a bad response, and how is one detected? This tutorial walks through advanced configurations that let you control these factors, better understand the Codex as-a-Backup integration, and decide how best to implement it for your application. We’ll go through several methods for detecting bad responses from your RAG app that warrant a backup response from Codex instead.

Let’s install/import required packages for this tutorial, which will use OpenAI LLMs.

%pip install openai  # we used package-version 1.59.7
%pip install thefuzz  # we used package-version 0.22.1
%pip install --upgrade cleanlab-codex

import openai
import os

os.environ["OPENAI_API_KEY"] = "<YOUR-KEY-HERE>"  # Replace with your OpenAI API key
client = openai.OpenAI()

Create Codex Project

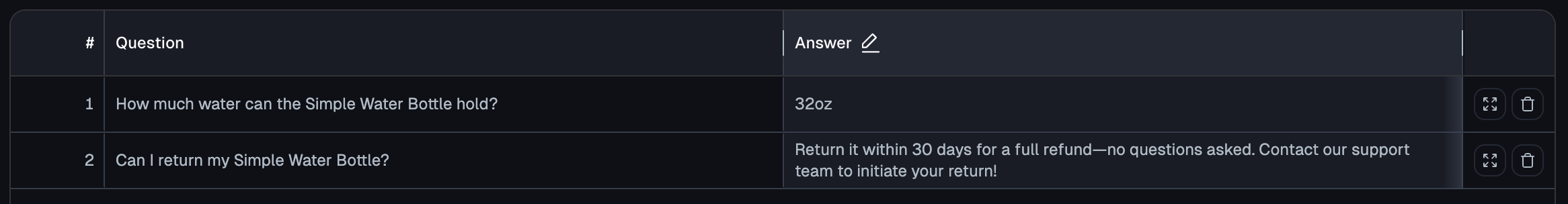

To later use Codex, we must first create a Project. Here we assume some (question, answer) pairs have already been added to the Codex Project. To learn how that was done, see our tutorial: Populating Codex.

Our existing Codex Project contains the following entries:

User queries where Codex detected a bad response from your RAG app will be logged in this Project for SMEs to later answer.

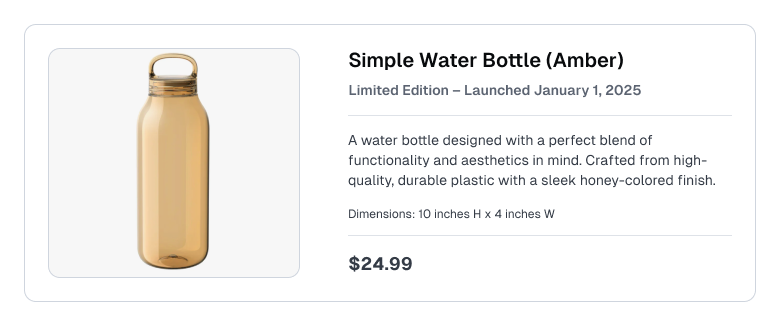

Example RAG App: Product Customer Support

Consider a customer support / e-commerce RAG use-case where the Knowledge Base contains product listings like the following:

Here we consider a basic single-turn (Q&A) RAG app; its details aren’t important.

To keep our app minimal, we mock the retrieval step (you can easily replace our mock retrieval with actual search over a Knowledge Base or Vector Database). In our mock retrieval, the same context (product information) is returned for every user query. After retrieval, the next step in RAG is to combine the retrieved context with the user query into an LLM prompt that is used to generate a response. Our LLM is instructed to ground its answers in the retrieved context, but also to abstain when it doesn’t know the answer.
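If you want to swap the mock retrieval for something slightly more realistic, the following is a minimal sketch. It assumes a tiny in-memory Knowledge Base and ranks documents by word overlap with the query. The names `KNOWLEDGE_BASE`, `tokenize`, and `retrieve` are hypothetical (they are not part of Codex or of this tutorial's app), and the second document is invented purely for illustration. A production app would typically use a search engine or Vector Database instead.

```python
import re

# Hypothetical in-memory Knowledge Base (the second entry is made up for illustration)
KNOWLEDGE_BASE = [
    "Simple Water Bottle - Amber. Price: $24.99. Dimensions: 10 inches height x 4 inches width.",
    "Simple Travel Mug - Slate. Price: $19.99. Holds 12 ounces.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


print(retrieve("How big is the Simple Water Bottle?", KNOWLEDGE_BASE))
```

The returned documents would then be joined into the context string that is passed to your prompt formatting function.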

Optional: Define helper methods for RAG

def format_prompt(context: str, query: str) -> str:
    """How prompts are formatted based on retrieved context and the user's query for your RAG LLM"""
    return f"Context: {context}\nQuery: {query}"

def RAG(llm):
    def query(query: str) -> tuple[str, str]:
        """Answer the query using RAG"""
        retrieved_context = """Simple Water Bottle - Amber (limited edition launched Jan 1st 2025)
A water bottle designed with a perfect blend of functionality and aesthetics in mind. Crafted from high-quality, durable plastic with a sleek honey-colored finish.
Price: $24.99 \nDimensions: 10 inches height x 4 inches width"""  # toy retrieval that returns hardcoded context for demonstration purposes (replace with your actual retrieval step)
        prompt = format_prompt(retrieved_context, query)
        response = llm(prompt)
        return (response, retrieved_context)
    return query

fallback_answer = "I cannot answer."
system_message = {"role": "system", "content": f"Answer questions based on the given context. If the information isn't explicitly stated in the context, respond with '{fallback_answer}'."}

# Configure a basic querying function with OpenAI
llm = lambda query: client.chat.completions.create(
    model="gpt-4o-mini",  # or another valid OpenAI model
    messages=[system_message, {"role": "user", "content": query}]
).choices[0].message.content

rag = RAG(llm)

Let’s ask some queries to our RAG app.

easy_query = "How big is the Simple Water Bottle?"
response, context = rag(easy_query)
print(response)

query = "Can I return my simple water bottle?"
response, context = rag(query)
print(response)

For the remainder of this tutorial, all queries to the RAG app will be this question about returns. This information is not available in our RAG app’s Knowledge Base (consisting of just the above product description). The particular Codex project we integrate with does already contain a SME-provided answer to this question.

Detecting Bad RAG Responses with Codex

We’ll go through several ways to detect bad RAG responses using the cleanlab_codex client library.

In a Codex as-a-Backup integration, Codex automatically evaluates whether the response from the original LLM is bad, to decide whether that response should be replaced when Codex contains an answer to a similar query. To implement this integration: run each query through your existing RAG app, check the RAG response with helper methods from Codex, query Codex if the response was flagged as bad, and override your RAG response with the Codex answer (including in the message history) if Codex returned one.
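The control flow of these steps can be sketched independently of any particular detector. The snippet below is an illustrative outline only: `answer_with_backup` and the stub functions are hypothetical names that stand in for your RAG app, a Codex detector, and a Codex query, and none of them call a real LLM or Codex.

```python
from typing import Callable, Optional


def answer_with_backup(
    query: str,
    rag_app: Callable[[str], str],
    is_bad: Callable[[str, str], bool],
    backup_lookup: Callable[[str], Optional[str]],
) -> str:
    """Return the RAG response, or a backup answer if the response is flagged as bad."""
    response = rag_app(query)
    if is_bad(query, response):
        backup = backup_lookup(query)
        if backup:  # keep the original response when no backup answer is available
            response = backup
    return response


# Stand-ins for demonstration only; none of these call a real LLM or Codex.
def stub_rag(query: str) -> str:
    return "I cannot answer."


def stub_detector(query: str, response: str) -> bool:
    return response == "I cannot answer."


stub_answers = {"Can I return my simple water bottle?": "Placeholder SME-provided answer."}

print(answer_with_backup("Can I return my simple water bottle?", stub_rag, stub_detector, stub_answers.get))
print(answer_with_backup("Is the bottle dishwasher safe?", stub_rag, stub_detector, stub_answers.get))
```

The first call is overridden by the placeholder backup answer, while the second keeps the original RAG response because the stub backup has no entry for that query.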

For advanced response checking, we’ll use Cleanlab’s Trustworthy Language Model (TLM). TLM automatically detects untrustworthy (potentially incorrect) LLM responses as well as those which are unhelpful, enabling Codex to more intelligently decide when to provide backup responses.

Optional: Configure Codex and TLM

from cleanlab_codex import Project
from cleanlab_codex.types.tlm import TLMConfig, TLMOptions

# Configure Codex
os.environ["CODEX_ACCESS_KEY"] = "<YOUR_ACCESS_KEY>"  # Get your access key from your Codex Project's page at: https://codex.cleanlab.ai/
codex_project = Project.from_access_key(os.environ["CODEX_ACCESS_KEY"])

# Configure TLM
# This step is optional. If omitted, the default TLM configuration will be used.
# Here, we provide a custom configuration for illustrative purposes.
# See the TLM documentation for more information on when you may want to provide a custom configuration:
# https://help.cleanlab.ai/tlm/tutorials/tlm_advanced/#optional-tlm-configurations-for-betterfaster-results
tlm_config = TLMConfig(quality_preset="low", options=TLMOptions(model="gpt-4o-mini"))

Detect all sorts of bad responses

The primary method, is_bad_response(), comprehensively assesses RAG responses for different issues. It evaluates whether a RAG response is suitable for the given query and context, helping you identify when your RAG system would benefit from a backup answer.

Here’s how to run your RAG app with Codex using this approach, asking the same query that the RAG app could not answer above.

from cleanlab_codex.response_validation import is_bad_response

response, context = rag(query)

is_bad = is_bad_response(
    response,
    # Optional arguments below
    context=context,  # Context used by RAG
    query=query,  # Original user query
    config={
        "tlm_config": tlm_config,
        "format_prompt": format_prompt,  # Your prompt formatting function
    }
)

if is_bad:  # See if Codex has an answer
    codex_response, _ = codex_project.query(
        query,
        fallback_answer=fallback_answer,
    )
    if codex_response:  # Codex was able to answer
        response = codex_response
        # Note: in conversational rather than single-turn Q&A applications, you additionally should overwrite response ← codex_response in the chat message history before returning the overwritten response.

print(response)

This query is now properly answered by the RAG app with Codex integrated as-a-Backup. That means the original RAG response was detected as bad, Codex was consulted, and it provided a desired answer for this query.
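For conversational apps, the note in the code above mentions overwriting the response in the chat message history. A minimal sketch of that step follows, assuming the history is stored as an OpenAI-style list of `{"role": ..., "content": ...}` dictionaries. The helper name `overwrite_last_assistant_message` is hypothetical and not part of Codex.

```python
def overwrite_last_assistant_message(messages: list[dict], new_content: str) -> list[dict]:
    """Return a copy of the chat history with the most recent assistant message replaced."""
    updated = [dict(message) for message in messages]  # shallow copies, so the input is untouched
    for message in reversed(updated):
        if message["role"] == "assistant":
            message["content"] = new_content
            break
    return updated


history = [
    {"role": "user", "content": "Can I return my simple water bottle?"},
    {"role": "assistant", "content": "I cannot answer."},
]
history = overwrite_last_assistant_message(history, "Placeholder Codex answer.")
print(history[-1]["content"])
```

Keeping the history consistent with what the user actually saw helps later turns build on the backup answer rather than on the replaced one.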

Let’s understand how the response was detected as bad. Codex’s is_bad_response() method is a comprehensive detector that evaluates the response for various issues. This method accepts many optional arguments; above we showcase recommended ones to include, such as the user query, the context that was retrieved, and the function you use to format prompts based on query+context for your LLM. Providing more arguments enables this method to detect more types of response issues (try to provide the same information that was provided to your LLM for generating the RAG response).

Codex’s is_bad_response() method considers various types of issues that can be individually detected by the narrower methods below. Whenever you provide the arguments required by one of these narrower methods to is_bad_response(), this comprehensive method will consider that type of issue in its audit. is_bad_response() returns True whenever any one of the narrower methods that was run detected a specific type of issue. We describe each of the narrower methods below, and demonstrate how to run Codex as-a-Backup with each of these more specific detectors (in case you want Codex to fix only a certain type of issue in your RAG app).
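The "any detector fires" logic described above can be illustrated with a small sketch. This is not the cleanlab_codex implementation: `is_bad_sketch` and the three toy detectors are hypothetical stand-ins with deliberately simplistic rules, used only to show how a check is skipped when its inputs are missing and how the results are combined.

```python
from typing import Optional


# Toy stand-ins for the narrower detectors (simplistic rules, for illustration only)
def looks_like_fallback(response: str) -> bool:
    return response.strip().lower() == "i cannot answer."


def looks_unhelpful(response: str, query: str) -> bool:
    return len(response.strip()) == 0


def looks_untrustworthy(response: str, query: str, context: str) -> bool:
    return response not in context


def is_bad_sketch(response: str, query: Optional[str] = None, context: Optional[str] = None) -> bool:
    """Run every check whose inputs were provided; flag the response if any check fires."""
    checks = [lambda: looks_like_fallback(response)]
    if query is not None:
        checks.append(lambda: looks_unhelpful(response, query))
    if query is not None and context is not None:
        checks.append(lambda: looks_untrustworthy(response, query, context))
    return any(check() for check in checks)


print(is_bad_sketch("I cannot answer."))
print(is_bad_sketch("It is free.", query="How much is it?"))
print(is_bad_sketch("It is free.", query="How much is it?", context="Price: $24.99"))
```

In the second call the toy untrustworthiness check never runs because no context was supplied, which mirrors why providing more arguments lets the comprehensive detector catch more types of issues.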

Detect fallback responses

RAG apps often abstain from answering, i.e. return a fallback answer along the lines of “I don’t know”, “I cannot answer”, or “No information available”. This can frustrate your users, and may happen whenever: the Knowledge Base is missing necessary information, your retrieval/search failed to find the right information, or the query or retrieved context appears too complex for the LLM to know how to handle.

Codex’s is_fallback_response() method detects when a response resembles a fallback rather than a real attempt at an answer. Here’s how to run your RAG app integrating Codex as-a-Backup with this more narrow detector.

from cleanlab_codex.response_validation import is_fallback_response

response, _ = rag(query)

is_bad = is_fallback_response(
    response,
    # Optional arguments below
    threshold=0.6,  # Threshold for string similarity against fallback answer (0.0-1.0), larger values reduce the number of detections
    fallback_answer=fallback_answer,  # Known fallback answer to match against (ideally specified in your RAG system prompt)
)

if is_bad:
    codex_response, _ = codex_project.query(
        query,
        fallback_answer=fallback_answer,
    )
    if codex_response:
        response = codex_response

print(response)

This detector uses fuzzy string matching to determine if the RAG response resembles your specified fallback answer. Here, Codex detected that the RAG response matches our RAG app’s fallback answer, and thus Codex was instead used to answer this query.
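To build intuition for threshold-based fuzzy matching, here is a rough sketch using `difflib.SequenceMatcher` from the Python standard library. This is a stand-in, not the Codex implementation: the similarity measure Codex uses may differ, and the helper name `resembles_fallback` as well as the `>=` comparison are assumptions made for illustration.

```python
from difflib import SequenceMatcher


def resembles_fallback(response: str, fallback_answer: str, threshold: float = 0.6) -> bool:
    """Flag the response when its similarity to the fallback answer reaches the threshold."""
    # ratio() returns a similarity between 0.0 (nothing in common) and 1.0 (identical)
    similarity = SequenceMatcher(None, response.lower(), fallback_answer.lower()).ratio()
    return similarity >= threshold


fallback = "I cannot answer."
for candidate in ["I cannot answer", "The bottle is 10 inches tall and 4 inches wide."]:
    print(candidate, "->", resembles_fallback(candidate, fallback))
```

Raising the threshold demands a closer match to the fallback answer, so fewer responses are flagged; lowering it flags looser paraphrases at the risk of false positives.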

Detect untrustworthy responses

Another option is to detect untrustworthy LLM outputs, i.e. those with low confidence of being good/correct.

Untrustworthy responses arise from: LLM hallucinations, missing context, or complex queries/context that your LLM is uncertain how to handle.

Cleanlab’s Trustworthy Language Model (TLM) can evaluate the trustworthiness of the response based on the provided query and context as well as any system instructions you are providing to your LLM.

Codex’s is_untrustworthy_response() method detects when a response is untrustworthy. Here’s how to run your RAG app integrating Codex as-a-Backup with this more narrow detector.

from cleanlab_codex.response_validation import is_untrustworthy_response

# Reminder: query = "Can I return my simple water bottle?"
# Here we mock a bad response instead of calling `response, context = rag(query)`
response = "The Simple Water Bottle is free of charge."  # This is a factually incorrect and irrelevant response.

is_bad = is_untrustworthy_response(
    response,
    query=query,  # User query
    context=context,  # Retrieved context from RAG
    # Optional arguments below
    trustworthiness_threshold=0.6,  # Threshold for trustworthiness score, lower values reduce the number of detections
    format_prompt=format_prompt,  # Function you use to format prompt for your LLM based on: query, context, and system instructions (should match your RAG prompt)
    tlm_config=tlm_config,  # Optional custom TLM configuration
)

if is_bad:
    codex_response, _ = codex_project.query(
        query,
        fallback_answer=fallback_answer,
    )
    if codex_response:
        response = codex_response

print(response)

Here, Codex detected that the above response was not a confidently good answer for the query, and thus Codex was instead used to answer this query.
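The effect of the trustworthiness threshold can be illustrated with a toy sketch. It assumes a response is flagged when its score falls below the threshold, which is consistent with the comment in the code above but is not taken from the Codex source. The function name `is_flagged` is hypothetical and the scores are made up; real scores would come from TLM.

```python
def is_flagged(trustworthiness_score: float, threshold: float) -> bool:
    """Assumed rule: flag a response when its score falls below the threshold."""
    return trustworthiness_score < threshold


# Made-up scores for illustration; real scores would come from TLM.
hypothetical_scores = [0.15, 0.45, 0.72, 0.91]

for threshold in (0.3, 0.6, 0.8):
    flagged = sum(is_flagged(score, threshold) for score in hypothetical_scores)
    print(f"threshold={threshold}: {flagged} of {len(hypothetical_scores)} responses flagged")
```

Under this assumption, a lower threshold sends fewer queries to Codex, while a higher one makes the backup more aggressive.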

Detect unhelpful responses

Another option is to detect RAG responses that do not seem helpful.

This is done by relying on TLM to classify the helpfulness of the response and considering its confidence-level.

This approach is useful if the retrieved context is not easily accessible in your RAG app.

Codex’s is_unhelpful_response() method detects when a response appears unhelpful with high confidence. Here’s how to run your RAG app integrating Codex as-a-Backup with this more narrow detector.

from cleanlab_codex.response_validation import is_unhelpful_response

response, _ = rag(query)

is_bad = is_unhelpful_response(
    response,
    query=query,  # User query
    # Optional arguments below
    confidence_score_threshold=0.6,  # Higher threshold requires TLM to be more certain before flagging responses as unhelpful
    tlm_config=tlm_config,  # Optional custom TLM configuration
)

if is_bad:
    codex_response, _ = codex_project.query(
        query,
        fallback_answer=fallback_answer,
    )
    if codex_response:
        response = codex_response

print(response)

Here, Codex detected that the RAG response does not appear helpful (recall our original RAG app responded: ‘I cannot answer.’). Thus Codex was instead used to answer this query.

Next Steps

Now that Codex is integrated with your RAG app, you and SMEs can open the Codex Project and answer questions logged there to continuously improve your AI.

This tutorial explored various approaches for detecting bad RAG responses, which are combined in Codex’s is_bad_response() method. While the detection methods vary based on the type of issues you are concerned about, the pattern for integrating Codex as-a-Backup remains consistent across different detectors.

Here the query detected to be poorly handled by our RAG system already had a SME-provided answer in the Codex Project integrated as-a-Backup, enabling your RAG app to properly respond. If no answer is available in Codex, then your RAG app simply returns its original response, and our detector automatically logs the poorly-handled query into the Codex Project. As soon as a SME answers this query, your RAG app will be able to properly handle all similar queries going forward.

You can select and configure the detection strategy that best suits your application’s needs. Whether implementing individual checks or using the comprehensive is_bad_response() detector, the end goal is the same: identifying cases where Codex can provide expert backup answers to reduce the rate of bad responses your users receive.